I wrote this post almost six years ago (March 21, 2012), but it’s the gift that keeps on giving.

These days, I feel a lot like Bill Murray in Groundhog Day, where at least a few times a year, my inbox is stuffed with concerned individuals forwarding me a paper (or, much more often, a story about a paper) implying red meat is going to send me to an early grave. The irony is that being stuck in some sort of sadistic red-meat time loop probably will do the trick. But at least I have meditation to help with that.

To be fair, the red-meat-studies are not the only culprit. As I mentioned in a Nerd Safari on epidemiology, John Ioannidis and Jonathan Schoenfeld picked 50 ingredients at random out of a cookbook and determined if each was associated with cancer. They found that at least one study was identified as showing an association for an increase or decrease in cancer for 40 out of the 50 ingredients. (The 10 that didn’t make the list were more “obscure,” as the authors put it: bay leaf, cloves, thyme, vanilla, hickory, molasses, almonds, baking soda, ginger, and terrapin. Thank heavens I can still have my terrapin.)

It’s probably not unfair to say that I put together this post as a coping mechanism. Fight fire with fire. You want a time loop? You may see the following post make its way to the front of the queue several times a year. While it’s of course not the best tack for me to close my eyes and block my ears to the latest article that forces a visceral reaction, it’s important to put things in context first.

This time around, I’m posting not because a new study just came out on red meat and mortality (although I haven’t checked my email in the past five minutes), but because we’re doing a series on this very topic of observational epidemiology.

Studying Studies: Part I – relative risk vs. absolute risk

Studying Studies: Part II – observational epidemiology

Studying Studies: Part III – the motivation for observational studies

I must admit, re-reading this post for the first time, I thought to myself, ‘Wow, Peter. Chill out…you really wrote that?’ Kinda like when I look at a picture of me from the ’90s. Dude, you wore that?

—P.A., January 2018

§

“For the greatest enemy of truth is very often not the lie—deliberate, contrived and dishonest—but the myth—persistent, persuasive, and unrealistic. Too often we hold fast to the clichés of our forebears. We subject all facts to a prefabricated set of interpretations. We enjoy the comfort of opinion without the discomfort of thought.”

– John F. Kennedy, Yale University commencement address (June 11, 1962)

I’m going to devote this post to a discussion of what I like to call the Scientific Weapon of Mass Destruction: observational epidemiology, at least for public health policy.

I had always planned to write about this most important topic soon enough, but the recent study out of Harvard’s School of Public Health generated more than enough stories like this one such that I figured it was worth putting some of my other ideas on the back-burner, at least for a week. If you’ve been reading this blog at all you’ve hopefully figured out that I’m not writing it to get rich. What I’m trying to do is help people understand how to think about what they eat and why. I have my own ideas, shared by some, of what is “good” and what is “bad,” and you’ve probably noticed that I don’t eat like most people.

However, that’s not the real point I want to make. I want to help you become thinkers rather than followers, at least on the topic of health sciences. And that includes not being mindless followers of me or my ideas, of course. Being a critical thinker doesn’t mean you reject everything out there for the sake of being contrarian. It means you question everything out there. I failed to do this in medical school and residency. I mindlessly accepted what I was taught about nutrition without ever looking at the data myself.

Too often we cling to nice stories because they make us feel good, but we don’t ask the hard questions. You’ve had great success improving your health on a vegan diet? No animals have died at your expense. Great! But, why do you think it is you’ve improved your health on this diet? Is it because you stopped eating animal products? Perhaps. What else did you stop eating? How can we figure this out? If we don’t ask these questions, we end up making incorrect linkages between cause and effect. This is the sine qua non of bad science.

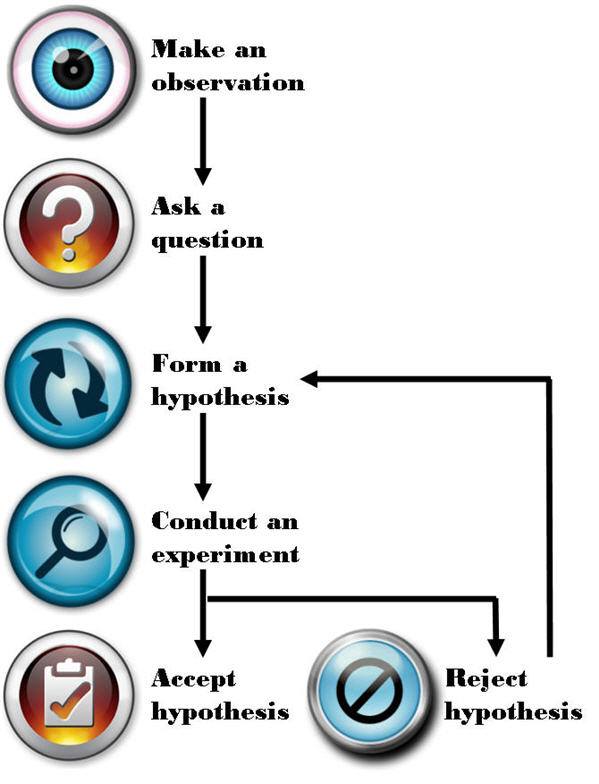

Most disciplines of science—such as physics, chemistry, and biology—use something called the Scientific Method to answer questions. A simple figure of this approach is shown below:

The figure is pretty self-explanatory, so let me get to the part that observational epidemiology inherently omits: “Conduct an experiment.” There is no shortage of observations, questions, or hypotheses in the world of epidemiology and public health—so we’re doing well on that front. It’s that pesky experiment part we’re getting hung up on. Without doing controlled experiments it is not possible to distinguish the relationship between cause and effect.

What is an experiment?

There are several types of experiments and they are not all equally effective at determining the cause-and-effect relationship. Climate scientists and social economists (like one of my favorites, Steven Levitt), for example, often carry out natural experiments. Why? Because the “laboratory” they study can’t actually be manipulated in a controlled setting. For example, when Levitt and his colleagues tried to figure out whether swimming pools or guns were more dangerous to children (i.e., was a child more likely to drown in a house with a swimming pool or be shot in a home with a gun?), they could only look at historical, or observational, data. They could not design an experiment to study this question prospectively and in a controlled manner.

How would one design such an experiment? In a “dream” world you would find, say, 100,000 families and randomly split them into two groups, group 1 and group 2. Groups 1 and 2 would be statistically identical in every meaningful way once divided: because assignment is random and the population is large, any differences between them (e.g., socioeconomic status, number of kids, parenting styles, geography) would cancel out. Next, the 50,000 group 1 families would have a swimming pool installed in their backyard and the 50,000 group 2 families would be given a gun to keep in their house.
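The “cancel out” step only works because families are assigned at random. Here is a minimal Python sketch of that idea (Python 3.8+); the baseline traits and their distributions are made up purely for illustration, and `randomize_families` is a hypothetical helper, not anything from a real study. It shuffles 100,000 simulated families, cuts the list in half, and reports how far apart the two groups’ averages land.

```python
import random
import statistics

def randomize_families(n_families: int, seed: int = 0) -> dict:
    """Randomly split simulated families into two equal groups and compare
    each group's average on a few baseline traits."""
    rng = random.Random(seed)
    # Hypothetical baseline traits with made-up distributions.
    families = [
        {
            "income": rng.lognormvariate(11.0, 0.5),  # household income
            "kids": rng.randint(1, 5),                # number of children
            "rural": rng.random() < 0.2,              # lives in a rural area
        }
        for _ in range(n_families)
    ]
    rng.shuffle(families)        # random assignment: shuffle...
    half = n_families // 2       # ...then cut the list in half
    group1, group2 = families[:half], families[half:]

    summary = {}
    for trait in ("income", "kids", "rural"):
        m1 = statistics.fmean(f[trait] for f in group1)
        m2 = statistics.fmean(f[trait] for f in group2)
        relative_gap = abs(m1 - m2) / ((m1 + m2) / 2)
        summary[trait] = (m1, m2, relative_gap)
    return summary

for trait, (m1, m2, gap) in randomize_families(100_000).items():
    print(f"{trait:>6}: group 1 = {m1:,.3f}, group 2 = {m2:,.3f}, relative gap = {gap:.2%}")
```

With groups this large, the relative gaps should come out at a few percent at most; shrink `n_families` to a few hundred and the gaps grow, which is why small trials can end up lopsided by chance.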

For a period of time, say 5 years, the scientists would observe the differences in child death rates from these two causes (accidental drownings and gunshot wounds). At the conclusion, provided the study was powered appropriately, the scientists would know which was more hazardous to the life of a child, a home swimming pool or a home gun.
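“Powered appropriately” means enrolling enough families that a real difference of a given size would be unlikely to slip by unnoticed. One standard back-of-the-envelope formula for comparing two proportions (normal approximation, two-sided test) is sketched below in Python 3.8+; the 5-year death rates plugged in are hypothetical placeholders, not real statistics, and `n_per_group` is an illustrative helper name.

```python
from math import ceil, sqrt
from statistics import NormalDist

def n_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of families needed in EACH group to detect a
    difference between event rates p1 and p2 (two-sided test,
    normal approximation for two independent proportions)."""
    z = NormalDist()                       # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)     # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)              # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p1 - p2) ** 2)

# Hypothetical 5-year child death rates per family (NOT real data):
# 6 in 10,000 homes with one hazard vs. 3 in 10,000 with the other.
print(n_per_group(0.0006, 0.0003))
```

Because the outcome is rare, the required number of families per group runs into the tens of thousands, which is part of why such a trial stays a “dream.”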

Unfortunately, questions like this (and the other questions studied by folks like Levitt) can’t be studied in a controlled way. Such studies are just impractical, if not impossible, to do.

Similarly, to rigorously study the anthropogenic CO2 – climate change hypothesis, for example, we would need another planet earth with the same number of humans, cows, lakes, oceans, and kittens that did NOT burn fossil fuels for 50 years. But, since these scenarios are never going to happen the folks that carry out natural experiments do the best they can to statistically manipulate data to separate as many confounding factors as possible in every effort to identify the relationship between cause and effect.

Enter the holy grail of experiments: the controlled experiment. In a controlled experiment, as the name suggests, the scientists have control over all variables between the groups (typically what we call a “control” group and a “treatment” group). Furthermore, they study subjects prospectively (rather than backward-looking, or retrospectively) while only changing one variable at a time. If an experiment changes too many variables at once, even an otherwise well-designed one, it prevents the investigator from making the important link: cause and effect.

Imagine a clinical experiment for patients with colon cancer. One group gets randomized to no treatment (“control group”). The other group gets randomized to a cocktail of 14 different chemotherapy drugs, plus radiation, plus surgery, plus hypnosis treatments, plus daily massages, plus daily ice cream sandwiches, plus daily visits from kittens (“treatment group”). A year later the treatment group has outlived the control group, and therefore the treatment has worked. But how do we know EXACTLY what led to the survival benefit? Was it 3 of the 14 drugs? The surgery? The kittens? We cannot know from this experiment. The only way to know for certain if a treatment works is to isolate it from all other variables and test it in a randomized prospective fashion.

As you can see, even a prospective controlled experiment like the one above is not enough if the trial is designed poorly. Technically, the fictitious experiment I describe above is not “wrong,” unless someone (for example, the scientist who carried out the trial or the newspapers who report on it) misrepresents it.

If the New York Times and CNN reported the following: New study proves that kittens cure cancer! would it be accurate? Not even close. Sadly, most folks would never read the actual study to understand why this bumper-sticker conclusion is categorically false. Sure, it is possible, based on this study, that kittens can cure cancer. But the scientists in this hypothetical study have wasted a lot of time and money if their goal was to determine if kittens could cure cancer. The best thing this study did was to reiterate a hypothesis. Nothing more. In other words, because of its design, this experiment (even assuming it was done perfectly well from a technical standpoint) taught us nothing other than that the combination of 20 interventions was better than none.

So what does all of this have to do with eating red meat?

In effect, I’ve already told you everything you need to know. I’m not actually going to spend any time dissecting the actual study published last week [March 12, 2012] that led to the screaming headlines about how red meat eaters are at greater risk of death from all causes (yes, “all causes,” according to this study) because it’s already been done a number of times by others this week alone. Three critical posts on this specific paper can be found here, here, and here.

I can’t suggest strongly enough that you read them all if you really want to understand the countless limitations of this particular study, and why its conclusion should be completely disregarded. If you want bonus points, read the paper first, see if you can understand the failures of it, then check your “answer” against these posts. As silly as this sounds, it’s actually the best way to know if you’ve really internalized what I’m describing.

Now, I know what you might be thinking: Oh, come on Peter, you’re just upset because this study says something completely opposite to what you want to hear.

Not so. In fact, I have the same criticism of similarly conducted studies that “find” conclusions I agree with. For example, on the exact same day the red meat study was published online (March 12, 2012) in the journal Archives of Internal Medicine, the same group of authors from Harvard’s School of Public Health published another paper in the journal Circulation. This second paper reported on the link between sweetened beverage consumption and heart disease, which “showed” that consumption of sugar-sweetened beverages increased the risk of heart disease in men.

I agree that sugar-sweetened beverages increase the risk of heart disease (not just in men, of course, but in women, too) along with a whole host of other diseases like cancer, diabetes, and Alzheimer’s disease. But, the point remains that this study does little to nothing to add to the body of evidence implicating sugar because it was not a controlled experiment.

This problem is actually rampant in nutrition

We’ve got studies “proving” that eating more grains protects men from colon cancer, that light-to-moderate alcohol consumption reduces the risk of stroke in women, and that low levels of polyunsaturated fats, including omega-6 fats, increase the risk of hip fractures in women. Are we to believe these studies? They sure sound authoritative, and the way the press reports on them, it’s hard to argue, right?

How are these studies typically done?

Let’s talk nuts and bolts for a moment. I know some of you might already be zoning out with the detail, but if you want to understand why and how you’re being misled, you actually need to “double-click” (i.e., get one layer deeper) a bit. What the researchers do in these studies is follow a cohort of several tens of thousands of people—nurses, health care professionals, AARP members, etcetera—and they ask them what they eat with a food frequency questionnaire (FFQ) that is known to be almost fatally flawed in terms of its ability to accurately acquire data about what people really eat. Next, the researchers correlate disease states, morbidity, and maybe even mortality with food consumption, or at least reported food consumption (which is NOT the same thing). So, the end products are correlations—eating food X is associated with a gain of Y pounds, for example. Or eating red meat three times a week is associated with a 50% increase in the risk of death from falling pianos or heart attacks or cancer.

The catch, of course, is that correlations hold no causal information. Just because two events occur in step does not mean you can conclude one causes the other. Often in these articles you’ll hear people give the obligatory, “correlation doesn’t necessarily imply causality.” But saying that suggests a slight disconnect from the real issue. A more accurate statement is “correlation does not imply causality” or “correlations contain no causal information.”

So what explains the findings of studies like this (and virtually every single one of these studies coming out of massive health databases like Harvard’s)?

For starters, the foods associated with weight gain (or whichever disease they are studying) are also the foods associated with “bad” eating habits in the United States—french fries, sweets, red meat, processed meat, etc. Foods associated with weight loss are those associated with “good” eating habits—fruit, low-fat products, vegetables, etc. But, that’s not because these foods cause weight gain or loss, it’s because they are markers for the people who eat a certain way and live a certain way.

Think about who eats a lot of french fries (or a lot of processed meats). They are people who eat at fast food restaurants regularly (or in the case of processed meats, people who are more likely to be economically disadvantaged). So, eating lots of french fries, hamburgers, or processed meats is generally a marker for people with poor eating habits, which is often the case when people are less economically advantaged and less educated than people who buy their food fresh at the local farmer’s market or at Whole Foods. Furthermore, people eating more french fries and red meat are less health conscious in general (or they wouldn’t be eating french fries and red meat—remember, those of us who do eat red meat regularly are in the slim minority of health-conscious folks). These studies are rife with methodological flaws, and I could devote an entire Ph.D. thesis to this topic alone.
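The “marker” argument is classic confounding, and it is easy to manufacture. In the hypothetical simulation below (every probability is invented, and `confounding_demo` is an illustrative helper), a hidden “health-conscious” trait makes people less likely to eat red meat and less likely to die, while red meat itself has no effect at all. The crude comparison still shows meat eaters at roughly 1.5 times the risk (a “50% increase”), and the association vanishes once you compare people within the same level of the hidden trait.

```python
import random

def confounding_demo(n: int = 200_000, seed: int = 2):
    """Simulate a cohort where a hidden trait drives both red-meat eating
    and death risk; red meat has NO causal effect by construction."""
    rng = random.Random(seed)
    # counts[(health_conscious, eats_red_meat)] = [deaths, people]
    counts = {(h, m): [0, 0] for h in (0, 1) for m in (0, 1)}
    for _ in range(n):
        conscious = int(rng.random() < 0.5)                       # hidden trait
        meat = int(rng.random() < (0.3 if conscious else 0.7))    # marker behavior
        died = rng.random() < (0.02 if conscious else 0.06)       # depends ONLY on the hidden trait
        counts[(conscious, meat)][0] += died
        counts[(conscious, meat)][1] += 1

    def risk(cells):
        deaths = sum(counts[c][0] for c in cells)
        people = sum(counts[c][1] for c in cells)
        return deaths / people

    # Crude comparison: pool everyone, ignore the hidden trait.
    crude_rr = risk([(0, 1), (1, 1)]) / risk([(0, 0), (1, 0)])
    # Stratified comparison: meat vs. no meat within each level of the trait.
    stratified_rr = {h: risk([(h, 1)]) / risk([(h, 0)]) for h in (0, 1)}
    return crude_rr, stratified_rr

crude, by_stratum = confounding_demo()
print(f"crude risk ratio, meat vs. no meat: {crude:.2f}")
for h, rr in by_stratum.items():
    print(f"within health_conscious={h}: {rr:.2f}")
```

Stratifying works here only because the simulation hands us the confounder. Real cohorts can adjust only for the confounders someone thought to measure, and usually measure them through the same imperfect questionnaires.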

What should we do about this?

I’m guessing most of you, and most physicians and policy makers in the United States for that matter, are not actually browsing the American Journal of Epidemiology (where one can find studies like this all day long). But occasionally, like last week, the New York Times, Wall Street Journal, Washington Post, CBS, ABC, CNN, and everyone else gets wind of a study like the now-famous red meat study and reports on it in a misleading fashion. Health policy in the United States (and by extension much of the world) is driven by this. It’s not a conspiracy theory, by the way. It’s incompetence. Big difference. Keep Hanlon’s razor in mind: never attribute to malice that which is adequately explained by stupidity.

This behavior, in my opinion, is unethical and the journalists who report on it (along with the scientists who stand by not correcting them) are doing humanity no favors.

I do not dispute that observational epidemiology has played a role in helping to elucidate “simple” linkages in health sciences (e.g., contaminated water and cholera or the linkage between scrotal cancer and chimney sweeps). However, multifaceted or highly complex pathways (e.g., cancer, heart disease) rarely pan out, unless the disease is virtually unheard of without the implicated cause. A great example of this is the elucidation of the linkage between small-cell lung cancer (SCLC) and smoking—we didn’t need a controlled experiment to link smoking to this particular variant of lung cancer because nothing else has ever been shown to even approach the rate of this type of lung cancer the way smoking has (reported relative risk of SCLC in current smokers of more than 1.5 packs of cigarettes a day was 111.3 and 108.6, respectively—over a 10,000% relative risk increase). As a result of this unique fact, Richard Doll and Austin Bradford Hill were able to design a clever observational analysis to correctly identify the cause and effect linkage between tobacco and lung cancer. But this sort of example is actually the exception and not the rule when it comes to epidemiology.
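The “over 10,000%” figure is simply the relative risk converted into a percentage increase, (RR − 1) × 100. A tiny Python sketch of that arithmetic, with `relative_risk` and `percent_increase` as illustrative helper names and hypothetical 2×2 counts for the contrast case:

```python
def relative_risk(exposed_cases: int, exposed_total: int,
                  unexposed_cases: int, unexposed_total: int) -> float:
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)

def percent_increase(rr: float) -> float:
    """Convert a relative risk into a percentage increase in risk."""
    return (rr - 1.0) * 100.0

# Relative risks quoted in the text for heavy smokers and SCLC.
for rr in (111.3, 108.6):
    print(f"RR {rr}: {percent_increase(rr):,.0f}% increase")
# RR 111.3: 11,030% increase
# RR 108.6: 10,760% increase

# For contrast, a typical nutrition-headline finding (hypothetical counts:
# 30 vs. 20 deaths per 1,000 people).
rr_small = relative_risk(30, 1000, 20, 1000)
print(f"RR {rr_small:.2f}: {percent_increase(rr_small):,.0f}% increase")
```

Put side by side, a relative risk above 100 and one around 1.5 are different beasts entirely, which is the gap between the smoking story and most nutrition headlines.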

Whether it’s Ancel Keys’ observations and correlations of saturated fat intake and heart disease in his famous Seven Countries Study, which “proved” saturated fat is harmful, or Denis Burkitt’s observation that people in Africa ate more fiber than people in England and had less colon cancer, “proving” that eating fiber is the key to preventing colon cancer, virtually all of the nutritional dogma we are exposed to has not actually been scientifically tested. Perhaps the most influential current example of observational epidemiology [circa 2012] is the work of T. Colin Campbell, lead author of The China Study, which claims, “the science is clear” and “the results are unmistakable.” Really? Not if you define science the way scientists do. This doesn’t mean Colin Campbell is wrong (though I wholeheartedly believe he is wrong on about 75% of what he says based on current data). It means he has not done sufficient science to advance the discussion and hypotheses he espouses. If you want to read detailed critiques of this work, please look to Denise Minger and Michael Eades. I can only imagine the contribution to mankind Dr. Campbell could have made had he spent the same amount of time and money doing actual scientific experiments to elucidate the impact of dietary intake on chronic disease. [For example, Campbell could have designed a prospective study following subjects randomized to one of two different types of diets for 10 years, plant-based or animal-based, with all other factors controlled for.] This is one irony of enormous observational epidemiology studies: not only are they of little value, but in a world of finite resources, they also detract from real science being done.

Featured Image credit: Design by K. Pauley (CC BY-SA 2.0)

Using climate science as the example of how statistical “science” ought to be done is just about the worst possible example you could have come up with. They make the nutritional science folks look rigorous.

Funny… Seriously, though, that’s not what I said. I’m using Levitt’s work and climate change science as contrasts: those fields have to rely on statistical analysis. How they do it, I’m not expert enough to comment on. The point is, nutrition science should not be doing this.

Climate Science is as rigorous as it gets. It’s physics and chemistry. For elucidation, check out https://www.realclimate.org/index.php/archives/2007/05/start-here/ (website by actual climate scientists), and yes, Virginia, their predictions are coming true with a bang. To understand the climate denial misinformation, I highly recommend:

https://www.youtube.com/greenman3610 (Climate Denial Crock of the Week)

Anyway Peter I love your website!

Thanks very much, but guys (re: ALL READERS), let us NOT turn this discussion into a debate about climate change. Please? It’s missing the point.

Actually, I am a climate scientist (paleoclimatologist) and I will attest that I have days when looking at all the scarce data and *hard* chemistry and physics that I use is just crazy… I completely see the correlation between nutritional chemistry and climate science. For example, I am as anal as they get in the laboratory. I am a chemist. However, I use organisms that lived roughly 250 million years ago. They are mostly recrystallized. I have no idea how badly these shells are affected. We can use the chemistry we know to correct for some of these chemical problems, but in the end, we have no idea about the biological factors that affected these organisms, and there are so many oceanographic factors that we just have no clue about… so we can make a good educated guess about what happened to change the isotopes or trace metal composition of these organisms, but in the end, we really just do not know the truth and we will never know the truth… whereas, with nutritional chemistry, at least we still have living organisms to study to try to figure out what happens on a cellular level!

hi Peter – great blog, but I have to take exception with one of your claims:

The catch, of course, is that correlations hold no (i.e., ZERO) causal information. Just because two events occur in step does not mean you can conclude one causes the other. Often in these articles you’ll hear people give the obligatory, “correlation doesn’t necessarily imply causality.” But saying that suggests a slight disconnect from the real issue. A more accurate statement is “correlation does not imply causality” or “correlations contain no causal information.”

This is incorrect. If a correlation exists, then there must be a cause present. A correlation between two variables implies unresolved causation; it may be that one of the variables is causing the other, or that some third variable is causing both, but if there is a non-spurious correlation then there must be a cause. In fact, one CAN infer causal structure from observational data under certain assumptions, using a set of causal inference methods developed in the machine learning literature. For more on these methods, see Pearl’s Causality, Spirtes et al.’s Causation, Prediction, and Search, or the TETRAD toolbox. Unfortunately, these methods seem to be rarely used in the epidemiology literature.

Russ, I’m not sure I agree with this, but I appreciate the point. Consider the following (true) example: if you look at the rise (seasonally adjusted) of atmospheric CO2 since about 1960 and compare it to transistor density on microprocessors, they are very strongly correlated, rising nearly in lockstep. While there are causes of both, the two events are actually independent, though statistically correlated. The drivers of transistor density and atmospheric CO2 are separate. Of course, some correlations ARE related, and a cause, either between them or via an external third variable, is at play. But looking at two correlated variables does not let us draw any causal information.

I agree that there are several elegant statistical tools to try to sort things out, but to my point in the post, let’s reserve those for the questions that can’t be answered experimentally. To use observational epidemiology to solve nutritional problems is like rubbing two sticks together when you’ve got a Zippo lighter. Why bother?

Nice job. I get tired of telling my co-workers at the lunch table that red meat isn’t bad for you. It’s the myth of record, so people think you’re a nut or a caveman who just wants to eat a lot of steak if you argue the point. After reading Gary’s books and articles and your blog and others, I’m frightened about the poor-quality science that is being dumped on the general public. In my work as an engineer I routinely design experiments and have to prove what I want to do is beneficial or at least does no harm. Luckily I have ways to do this that are rigorous and allow me to make informed decisions. If I let my personal biases get in the way, it could lead to decisions that would kill the product we sell, and that wouldn’t be good for me or my company. I have never seen so much cherry picking of data in my whole life as an engineer as I have seen reading various “Studies” which prove Salt is bad, etc. It seems to me the researchers who spend their career saying red meat is bad are so invested in their preconceived conclusions that they cannot objectively design their experiments or draw the correct conclusions from their work. Unfortunately, poor health decisions can lead to shortened lives for people who followed the current conventional wisdom.

Dave, go forth and spread the word!

A bit off topic, but a study today suggests that aspirin may reduce cancer incidence

https://news.yahoo.com/studies-aspirin-day-keep-cancer-bay-000306036.html

However, no mention of past studies on aspirin-insulin sensitivity linkage:

https://www.usatoday.com/news/health/2001-08-30-aspirin.htm

How’s that for an epidemiological mash-up?

Any thoughts?

Beyond my more than 3,000 word post?

I remember seeing this article also published by the Harvard School of Public Health shortly after the red meat article.

Hu EA, et al “White rice consumption and risk of type 2 diabetes: Meta-analysis and systematic review” BMJ 2012; DOI: 10.1136/bmj.e1454.

https://www.bmj.com/content/344/bmj.e1454

It’s also interesting how the results automatically lead the researchers toward whole grains instead of wondering about grains altogether.

HSPH is literally a *machine* churning out this sort of “stuff.”

I have taken more courses in statistics than I care to remember, and one time in a graduate class we performed a regression analysis on random numbers and got some surprising results. (I’ve done my best to forget the stuff.) What strikes me about the statistics in nutritional studies is how small the correlations are and how little of the variance is accounted for, but you’d never know it from the media hype these studies get.

In a review of Gary’s GCBC the physicist Robert McCleod makes the following observation:

“As a physicist, if I get a correlation coefficient, R² < 0.9997 in an experiment, I would consider that a poor result. A nutritional researcher working with human patients cannot even dream of achieving the degree of control or characterization I can, and their data are overloaded with spurious noise.

Researchers in the soft sciences typically do not have sufficient math skills to understand the statistical methods that they are using to evaluate their data. I've lost track of how many times I've seen evaluations of the mean and standard deviation for distributions that are clearly not normal (also known as Gaussian). Don't even get me started on p-values. More importantly, very few medical studies attempt to test a single hypothesis. Far too many studies will compare apples to bananas, rather than apples to no apples, or they'll compare apples, oranges, and bananas to no fruit. Making conclusions from such messily designed experiments is rife with the potential for misinterpretation. Drug studies are often an exception.” https://entropyproduction.blogspot.com/2009/02/all-medical-science-is-wrong-within-95.html

Epidemiology sometimes might be useful in generating hypotheses, but should never be used as a substitute for actual science. And one of the key things that's often missing is the notion of falsifiability: no matter how many times the lipid hypothesis, etc. have been struck down, it's never given the burial it deserves.

Thank you, Elizabeth. Very well said. I agree, also, with your point about lack of math skills. In fact, one of the more embarrassing moments for T. Colin Campbell, while defending his assertions from the China Study, stems from his inability to distinguish between univariate and multivariate regression. Imagine the higher-order mistakes being made if something this simple is being fouled up.

Drug studies are easier to do because they at least can use placebos. If you look at a proposed diet study to actually (attempt to) answer whether red meat is bad for you, no matter what you do, you’ll change two elements of diet. For instance, if you have a control group and a group that eats no red meat (randomized into the two groups, of course), and calories remain the same in the group eating no meat, they have to make up those calories somehow. Are the differences between groups due to eating no red meat or to replacing the red meat with something else (whatever that something else is)? But at least you can get closer to an answer than epidemiological studies can.

We can do MUCH better than this. And we will.

One interesting thing I noticed in the Harvard data, is that there’s a strong inverse correlation between how much red meat was eaten and cholesterol levels, despite a strong negative correlation between how much red meat was eaten and amount of exercise done.

So people who ate the most red meat, also did the least physical exercise, yet had the lowest incidence of high cholesterol.

I wonder why I didn’t see “Eating Red Meat Lowers Cholesterol Without Exercise” headlines 🙂

Great observation! I can only imagine what we could extract from these data if we had the raw data, instead of just summary data.

People, Peter gave us another great post.

Why is everyone going off on tangents? There are already enough people who will attack us for how we think about nutrition. Why “go after” one of the strongest and clearest voices on the web with tiny little nitpicking based on personal opinions about examples.

I’ve lost 100 lbs in the year since I first read Taubes and started following this lifestyle. Yet my wife still hates how I eat, even though I’m eating more green veggies than I ever ate before. She can’t wrap her head around so much meat being healthy because of all the BS and dogma that the media and government keep generating.

Thank you for another great and informative post Peter, now if only I could get my wife to read it.

Consider having your wife watch “Fat Head” on Hulu.com or Netflix.com. It’s a simply enlightening documentary that has intelligent information and is entertaining.

My parents watched it too, and they were shocked with the information we’ve never been told.

Brian.

I can relate. My wife was wondering why I had turned to eating so much fat compared to what I normally would. In the wash-up after she read my copy of Why We Get Fat, I made the point that she forgot what I said when I first started this dietary change: this was about eliminating carbs, not eating more fat. It is easy to notice the consumption of fat given its current public stigma. It’s all in the evidence, and in your case, it’s dropped off to the tune of 100 lbs.

I agree. Some of us are looking for real solutions, and if we want to read about the environment, we can go elsewhere. I started losing weight seven years ago, and I’m still 100 pounds down, but I have 60 more to go and it seems almost impossible to reach my ultimate goals. There have been times I have devoted ridiculous amounts of time to exercise and spent tens of thousands of dollars on trainers. So when Peter says exercise doesn’t provide the answers, I can relate. I still wouldn’t trade one hour of exercise for one hour of living with the pain of morbid obesity. I’ve been reading this blog for about 10 days now, and have started trying to follow it. I have a few reservations. While I appreciate the scientific approach, it’s sometimes difficult to follow for a simple mind such as mine. I look for the practical applications, which is why I return to the post about what the good doctor eats. I’m currently devouring as much information as I can find. Today, it just felt really weird when I resorted to eating salami and cheese. I started to re-read because “surely” I’m not understanding something. I am determined. I weighed 345 at my maximum weight, and I can personally attest that living to be healthy is the only way to live.

Peter,

Great post. I agree fully with your approach: we should all be critical thinkers, not merely problem solvers or passive accepters of dogma. Part of the problem is that the modern system has introduced so much complexity that we as a society have come to rely on so-called experts, who are under their own social and ideological pressures that influence their work. All segments of academia and other professional fields suffer from this.

Right now I am reading, with some amazement, a couple of articles in the NYT about the new Nestle book. I am trying to read them with an open mind, but I am finding their premise difficult to swallow. In short, I’m shocked by how widely the dogma of “calories as a thermodynamic calculus on the body” continues to be accepted.

Keep up the good work on the blog. I look forward to your postings.

Thanks for your support. I struggle to read this stuff, too, despite trying to keep an open mind. When you really believe the world is round, it gets tough to listen to the masses drone on about a flat world.

To really drive it home, observational epidemiology is exactly what it sounds like: observational. Going back to your diagram of the scientific process, everything underneath the eyeball is ignored. At best, it’s a tool that helps you sift through obviously worthless observations so that experiments can later be designed and executed on the potentially worthwhile ones. The only notable exceptions are when P values are insanely small, like P ≤ 0.00001.

And a minor nitpick: your chart leaves out the community process of science, such as peer review, replication by other scientists, and verification via experiments designed to explore any weak spots left by another study. Here’s an interesting flowchart from Understanding Science: https://undsci.berkeley.edu/flowchart_noninteractive.php

Peter,

I know nothing about microprocessor manufacture per se, but following up your objection to Russ Poldrack’s comment: are you sure that the manufacture of microprocessors does not produce atmospheric CO2? And while the density of transistors on microprocessors could be independent of the CO2 produced, the increasing density could also contribute to an increase in CO2, for all I know.

I’m not convinced by your example. But perhaps it’s too pedantic of me. The larger point made by both you and Russ Poldrack, I understand: until we do a proper experiment and get a positive result (or eliminate all negatives), we simply don’t know what causes a correlation (although we know what causes us to see the correlation!).

One other thing: there’s a post about this study by “Health Correlator” Ned Kock, an academic statistician, that takes the opposite view. He looks at the study’s published data and finds that increased meat consumption is associated with decreased mortality! That tells me that the larger problem has to do with statistical interpretation. It’s worth a read. See: https://healthcorrelator.blogspot.com/

Statistical interpretation? Forget it! How do we know we’re not fooling ourselves?

(I like the comment somewhere in one of the critiques you mention: red meat only kills you if it is stronger and faster than you, has an appetite, and eats red meat.)

Cheers,

Patrick

Could be, but pretty unlikely, certainly as the *primary* driver. But you draw the right conclusion — let’s do actual science! I have seen the other review you mention, but have not been able to scrub it yet. My point would still stand, though.

Don’t you guys know that it is the shrinking number of pirates that is at the root of global warming? The correlation is clear!

Very informative post! There’s a lot here that will help me in explaining these concepts to others.

I thought I was really getting through to one of my scientist friends the other day when he agreed that all these studies go through only 3/5 of the scientific process – but he still fervently believes that saturated fat causes heart disease because that’s the consensus.

Just point him in the direction of Ancel Keys and the Seven Countries Study (along with a few other nuggets of bad science I hope to write about soon).

I recently finished Good Calories, Bad Calories and have turned away from the “dark side” forever. I found your blog last month through Gary. Love your writing.

When I saw the news about this study last week I knew all you guys would be turning out great posts to refute it, and I haven’t been disappointed.

Although this story was covered on a number of media sites, the only one I read which provided a caveat was USA Today. To their credit, their account included this paragraph:

“This new study provides further compelling evidence that high amounts of red meat may boost the risk of premature death,” said the study’s lead author, An Pan of the Harvard School of Public Health. But, he added, this type of study shows association, which doesn’t necessarily mean causation.

Of course, the use of that pesky “necessarily” renders this statement next to useless.

Don’t give them TOO much credit. The obligatory caveat is just a convenient way to continue convincing people that it’s a good idea to use sun dials for telling the time when quartz watches have already been invented. Very convenient when you sell sun dials.

Dr. Attia,

Speaking of journalism and science, I came across these guys a couple of days ago:

https://www.healthnewsreview.org/

Even though I frequently engage in the futility of trying to change other people’s minds, I think Max Planck had it right:

“A new scientific truth does not triumph by convincing its opponents and making them see the light, but rather because its opponents eventually die, and a new generation grows up that is familiar with it.”

I think we’re in this fight for the long haul. Nobody likes to believe they were wrong or were fooled, so the low-fat crowd won’t give up easily. Minds change one at a time.

Bob, this is also one of my favorite quotes.

I am a climate change scientist: I study climate change from hundreds of millions of years ago to recent times, on many different timescales. There are so many similarities between climate science and nutrition science, and sometimes it is very frustrating. For example, it seems that, in general, people with no or limited knowledge of science tend to form the firmest opinions about what the best science is, and those opinions are never grounded in any kind of reality. People like to believe what is easiest to believe. Carbohydrates make for great-tasting food, and they now seem to be a major staple in many cultures. If you tell people that carbohydrates will kill them prematurely and reduce the quality of their lives, they immediately go on the defensive: how will children in India survive without rice and grains? If we eat too much meat, won’t that reduce the availability of grains produced as aid packages? The answer is no, not really. Similarly, energy companies rely on the addiction people and societies have developed to carbon-based fuels, so if we change our infrastructure to rely more on solar or hydro power, there may be chaos in the markets.

As a scientist, I have no interest in the political side of the argument. I am a human and, as selfish as it may sound, I care most about my own survival and, as a thinker, about the truth. What does it really take to create a healthy society? Is it *true* that fossil fuels will lead to unstable global heating? Is it true that eating a diet rich in carbohydrates will lead to an unhealthy and *expensive* society with greater medical costs?

If today I read a clear answer to this question, say a scientific experiment showing that the composition of red meat alone (and was the beef grass fed or grain fed? What was the nutrient composition of the red meat?) reacts with the human body in a way that directly leads to adipose fat storage, inflammation that causes heart disease and cardiac arrest, hyperinsulinemia/metabolic syndrome, etc., then I will definitely reconsider what I have read about carbohydrates doing all of the above. However, all of the clues that scientists have put together seem to show, at least indirectly, that carbohydrates are the culprit, and every year we have more and more evidence of just that. I just wish the news media that cater to the general, non-scientist public would get with it, but of course sensational stories are what sell to that audience.

Samantha, I also work in the field of climate change, not as a scientist, although nearly 30 years ago, when I was at university, I did spend a month or two attempting to reconstruct past climates from fossil foraminifera. My background is environmental science, and like Gary Taubes I became a science journalist; I now work mainly in the area of climate change communication.

To me, the big parallel between climate science and nutritional science is that in both areas I see vigorous challenges to the “consensus” view (I put quotes around consensus because true consensus requires 100% agreement, and that’s very rare in science; there is no consensus on climate change but there is a majority view). These challenges are a vital part of the process of science: we need skeptics to question the accepted wisdom. So far, most of the arguments raised by skeptics on climate change have served ultimately to strengthen confidence in the “consensus” view, because the great bulk of arguments put forward by the skeptics have been shown to be wrong or at least on very weak ground. That doesn’t mean the science is settled: science isn’t done by vote, and one person who’s right is worth 10,000 who are wrong. The history of science is full of good examples of the consensus view being overturned. But with climate change there is so much evidence emerging from so many different quarters (with models playing only a relatively small role) that it seems unlikely the basic hypothesis can be overturned.

While “consensus” is irrelevant to science, it does matter from a policy perspective, because there is no responsible justification for basing policy on the views of a minority of skeptics. I think this is what we’re seeing in the field of nutrition as well. The “Central Dogma” remains tenacious in guiding public policy and mainstream medical advice, not because nobody is aware of Gary Taubes and the evidence he and others are bringing to light, but because the tide of expert opinion has not yet shifted in that direction.

Why hasn’t the tide shifted? It could be due to inertia, to the difficulty of shaking deeply held beliefs, to the unwillingness to look back at one’s career and accept that you wasted it barking up the wrong tree…there are lots of potential reasons. But I think the caution is appropriate, because in order to effect a shift like this you need extremely convincing evidence. And based on what I’ve been reading, the mainstream experts in the field haven’t been convinced yet.

I don’t think nutritional advice in America is going to be shifted by gathering thousands of supporters of Gary Taubes and Peter Attia to raise awareness of their findings. It’s going to require publishing convincing evidence, opening it for a thorough probing to explore any weaknesses, and addressing those weaknesses.

Plate tectonics and other theories and principles that were once minority views and are now mainstream demonstrate that central dogmas can be changed. It just takes time to amass enough convincing evidence.

Brad, thanks for providing the quote of the day: “That doesn’t mean the science is settled: science isn’t done by vote, and one person who’s right is worth 10,000 who are wrong.”

Thanks for this post, Samantha. As one of the lay people with zero understanding of science, I rely on scientists like you, and when I read confirmation of what SEEMS logical to me, it helps.

“Never attribute to malice that which is adequately explained by stupidity.”

I would argue that the scientists who do these studies are neither stupid nor malicious. I believe they are motivated more by fear of having their funding pulled. If you spend a bunch of money on a study and don’t find anything useful, you’re not going to get more funding. I used to work in science and left because I was so disenchanted by what I saw. Many scientists will publish anything that shows a shred of evidence supporting the original hypothesis for which they were funded. This is a sad state of affairs in the scientific community.

On the other hand, conducting controlled experiments on diet is hard. If you were assigned to the 100-ounces-of-soda-a-day group and started packing on pounds, would you really stay in the study? There are ethical implications of asking people to consume things they think might be bad for them. This is the other reason we don’t assign people swimming pools and guns to see whether they kill their kids. Who would volunteer for that study?

The other confounding aspect here is that people want easy answers. When no one understood any of the causes of heart disease, doctors were watching their patients die and didn’t know what to tell them. Doctors want to help their patients! That’s their job. Enter Ancel Keys, who tells them: look… it’s the fat and the cholesterol! Then doctors had something they could do, and they felt like they were helping.

The current system of medicine and science is very broken. Not so much out of malice or stupidity as out of self-preservation and the desire to come up with answers, even if they are not correct.

“Never attribute to malice that which is adequately explained by stupidity.” The quote doesn’t say whose stupidity. 🙂

I have to leave something to your imagination, don’t I?

Great post (as usual)!

A friend sent me a study suggesting that circulating endotoxin levels spike in diabetics who have eaten a high-fat meal (I’ve pasted this piece of science at the end of this message). I want to suggest that there are other forms of bad science out there, which rely solely on surrogate markers (even high-quality randomized trial data, although this was not one). A pre-post study design is piss-poor epidemiology, even if it is experimental. Where is the high-carb control group? And what does an overnight fast plus a single meal composed of *anything* tell us about long-term human health? Absolutely nothing. I’m not even sure what the relevance of endotoxin levels is, although I know that endotoxin has been used as a therapy to cure some chronic infectious diseases for decades, particularly in Europe.

High Fat Intake Leads to Acute Postprandial Exposure to Circulating Endotoxin in Type 2 Diabetic Subjects

Alison L. Harte, PHD1, Madhusudhan C. Varma, MRCP1, Gyanendra Tripathi, PHD1, Kirsty C. McGee, PHD1, Nasser M. Al-Daghri, PHD2, Omar S. Al-Attas, PHD2, Shaun Sabico, MD2, Joseph P. O’Hare, MD1, Antonio Ceriello, MD3, Ponnusamy Saravanan, PHD4, Sudhesh Kumar, MD1 and Philip G. McTernan, PHD1


1Division of Metabolic and Vascular Health, University of Warwick, Coventry, U.K.

2College of Science, Biomarkers Research Programme and Center of Excellence in Biotechnology Research, King Saud University, Riyadh, Saudi Arabia

3Institut d’Investigacions Biomèdiques August Pi i Sunyer (IDIBAPS) and Centro de Investigación Biomédica en Red de Diabetes y Enfermedades Metabólicas Asociadas (CIBERDEM), Barcelona, Spain

4University of Warwick and George Eliot Hospital, Clinical Sciences Research Laboratories, Warwick Medical School (University Hospital Coventry and Warwickshire Campus) Coventry, U.K.

Corresponding author: Philip McTernan.

Abstract

OBJECTIVE To evaluate the changes in circulating endotoxin after a high–saturated fat meal to determine whether these effects depend on metabolic disease state.

RESEARCH DESIGN AND METHODS Subjects (n = 54) were given a high-fat meal (75 g fat, 5 g carbohydrate, 6 g protein) after an overnight fast (nonobese control [NOC]: age 39.9 ± 11.8 years [mean ± SD], BMI 24.9 ± 3.2 kg/m2, n = 9; obese: age 43.8 ± 9.5 years, BMI 33.3 ± 2.5 kg/m2, n = 15; impaired glucose tolerance [IGT]: age 41.7 ± 11.3 years, BMI 32.0 ± 4.5 kg/m2, n = 12; type 2 diabetes: age 45.4 ± 10.1 years, BMI 30.3 ± 4.5 kg/m2, n = 18]. Blood was collected before (0 h) and after the meal (1–4 h) for analysis.

RESULTS Baseline endotoxin was significantly higher in the type 2 diabetic and IGT subjects than in NOC subjects, with baseline circulating endotoxin levels 60.6% higher in type 2 diabetic subjects than in NOC subjects (P < 0.05). Ingestion of a high-fat meal led to a significant rise in endotoxin levels in type 2 diabetic, IGT, and obese subjects over the 4-h time period (P < 0.05). These findings also showed that, at 4 h after a meal, type 2 diabetic subjects had higher circulating endotoxin levels (125.4%) than NOC subjects (P < 0.05).

CONCLUSIONS These studies have highlighted that exposure to a high-fat meal elevates circulating endotoxin irrespective of metabolic state, as early as 1 h after a meal. However, this increase is substantial in IGT and type 2 diabetic subjects, suggesting that metabolic endotoxinemia is exacerbated after high-fat intake. In conclusion, our data suggest that, in a compromised metabolic state such as type 2 diabetes, a continual snacking routine will cumulatively promote their condition more rapidly than in other individuals because of the greater exposure to endotoxin.

Received August 18, 2011.

Accepted November 4, 2011.

© 2012 by the American Diabetes Association.

Take a look at the “Design of Experiments” literature to see that it is actually more mathematically efficient to vary more than one variable at a time. It is unfortunate that most engineering schools don’t teach this subject to their students. I had to learn about it at work from a brilliant statistician.
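To make the efficiency point concrete, here is a minimal Python sketch of a two-level full factorial design for three factors. The response function and its coefficients are invented purely for illustration, and the helper names (`response`, `full_factorial`, `main_effects`) are hypothetical, not taken from any library or study. Each main effect is estimated as the mean response at the high level minus the mean at the low level, so every one of the 8 runs contributes to every estimate. A one-factor-at-a-time plan, by contrast, compares individual runs against a baseline and cannot estimate interactions between factors.

```python
from itertools import product

# Hypothetical response on coded levels -1/+1; coefficients are made up for illustration.
def response(a, b, c):
    return 10 + 3 * a - 2 * b + 0.5 * c

def full_factorial(k):
    """All 2**k combinations of coded levels -1/+1 for k factors."""
    return list(product((-1, 1), repeat=k))

def main_effects(design, ys):
    """Main effect of factor j = mean(y at +1) - mean(y at -1), using every run."""
    k = len(design[0])
    effects = []
    for j in range(k):
        hi = [y for run, y in zip(design, ys) if run[j] == 1]
        lo = [y for run, y in zip(design, ys) if run[j] == -1]
        effects.append(sum(hi) / len(hi) - sum(lo) / len(lo))
    return effects

design = full_factorial(3)             # 8 runs covering every combination
ys = [response(*run) for run in design]
print(len(design), main_effects(design, ys))
```

Running it prints `8 [6.0, -4.0, 1.0]`: each main effect is twice the made-up coefficient because the coded levels span from -1 to +1. With noisy measurements, each estimate would average four runs against four runs rather than comparing single runs.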

Excellent post, Peter, and I am glad you pointed out the smoking example. Sometimes associations do count; after all, where there is smoke there is fire! Sadly, the nutrition/diet studies never give rise to a statistically significant level of association. As to the unrelated topic of the environment, I can only say that the “talking heads” went to town on the destruction of the ocean, etc., after the BP oil spill. If they had used their heads, they would have remembered that the ocean has amazing self-cleaning properties, which have been demonstrated in past spills.

Your opening quote of Kennedy said it all. Excellent.