I know I’m not alone in my chronic frustration with nutritional research. The unfathomable volume of conflicting results and poorly designed studies can often make it seem that we’re moving backwards, knowing less when a study concludes than we did when it began, especially when it comes to the study of nutrition and dementia prevention. Certain macroscopic points are clear – diets that promote energy balance and insulin sensitivity are unequivocally better for cognitive health than diets that fail in these goals – yet research to date has yielded precious few “answers” with regard to specific nutrients and even overall diet patterns that might be beneficial or harmful (assuming equivalent effects on energy balance and insulin sensitivity). In other words, once you correct for a diet that keeps you from being overweight or insulin resistant, does it matter at all what makes up that diet?

Observational studies have pointed to a number of dietary components as being neuroprotective, but as we saw with the recent MIND diet trial, controlled clinical trials have consistently failed to support conclusive causal links. As I’ve repeated countless times, randomized controlled trials (RCTs) generally provide far more reliable data than epidemiological studies, but it certainly doesn’t seem possible that diet would have no effect on cognition and cognitive decline, as most nutrition RCTs appear to suggest. So what should we make of this discrepancy? And how can we hope to resolve it in the future?

Single Nutrients vs. Whole Diets

Despite their many shortcomings, nutritional epidemiology studies are useful for forming hypotheses that can then be examined more rigorously through interventional studies, but not all research questions are easily approached with RCTs. While epidemiology often seeks to identify correlations between clinically relevant parameters and whole dietary patterns, most nutrition RCTs for dementia prevention have examined the effects of altering intake of just a single nutrient. This makes sense when we consider that intake of single nutrients is easy to manipulate (e.g., through supplementation) in a precise and consistent manner across a large study cohort at relatively low cost. Further, designing an appropriate control group is simple, usually involving treatment with a placebo.

But an intervention based on a single nutrient might not reveal the whole truth about that nutrient’s effects. Let’s consider vitamin E as an example. Though observational studies have found associations between vitamin E levels and cognitive health, clinical trials testing whether vitamin E supplementation reduces dementia risk or improves cognitive trajectories have shown no effect. While it could be true that there is indeed no causal relationship between vitamin E and cognition, another possibility is that vitamin E supplementation acts synergistically with one or more other dietary components and is thus only neuroprotective if all are consumed in combination. Such an effect might be detected in whole-diet studies but would likely be missed under the more limited scope of single-nutrient studies.

Why haven’t RCTs tested whole diet patterns?

Whole-diet interventions altering consumption of multiple foods may have different or greater effects on cognition than isolated nutrients, but implementing a whole-diet intervention in a clinical trial is no easy task. Such a trial needs to be conducted in free-living participants, meaning they would need to feed themselves for many years (assuming we are talking about the study of clinical outcomes such as onset of dementia). If the goal were to make this as rigorous as possible, researchers would need to carefully oversee – if not supply – the full diet of every participant for years, an expensive and logistically impossible endeavor. Properly controlling such studies is also difficult – how can you design a control for the myriad variables that make up the whole dietary pattern?

Additionally, the way nutrients are processed varies among individuals and depends on a number of factors including genetics, metabolism, microbiome, culture, and environment. Applying an intervention in one population may have different effects than applying it in another. So how do we even know where to start looking?

And finally, even if such a study were successfully carried out and demonstrated a significant effect, how would we then know which of the many components of the dietary intervention are responsible for the outcome? Most diets include both “healthy” and “unhealthy” components, so considering the diet as a whole can obscure our ability to use research results to determine a truly optimal diet. For example, if a Mediterranean diet were found to promote cognitive health, this result alone could tell us nothing about whether the effect might be due to increased intake of fish and omega-3 fatty acids versus intake of baklava and honey, or all of the wine for that matter.

So how can we move forward?

Last year, I highlighted an excellent review by Dr. David Allison and his colleagues on the many shortcomings of nutritional epidemiology and suggestions for ways to improve the field. Since then, the Nutrition for Dementia Prevention Working Group has published a report. In it, Yassine et al. (Dr. Yassine is a previous guest on The Drive) offer strategies for bridging the gap between observational and interventional nutrition studies by adjusting our approach to both of these modalities and using a combination of the two to tackle research questions more effectively. So let’s take a closer look at a few key points and how they might improve the field.

#1: Network Analysis

Yassine et al. propose the use of network analysis in observational studies as a tool for providing a more comprehensive understanding of the effects of nutrition on health. Network analysis, often used in fields like computer science and sociology, is a technique that examines relationships between different entities, whether they are computers in a network, people in a society, or, in this case, components of our diet. So, what does this look like in the realm of nutritional studies?

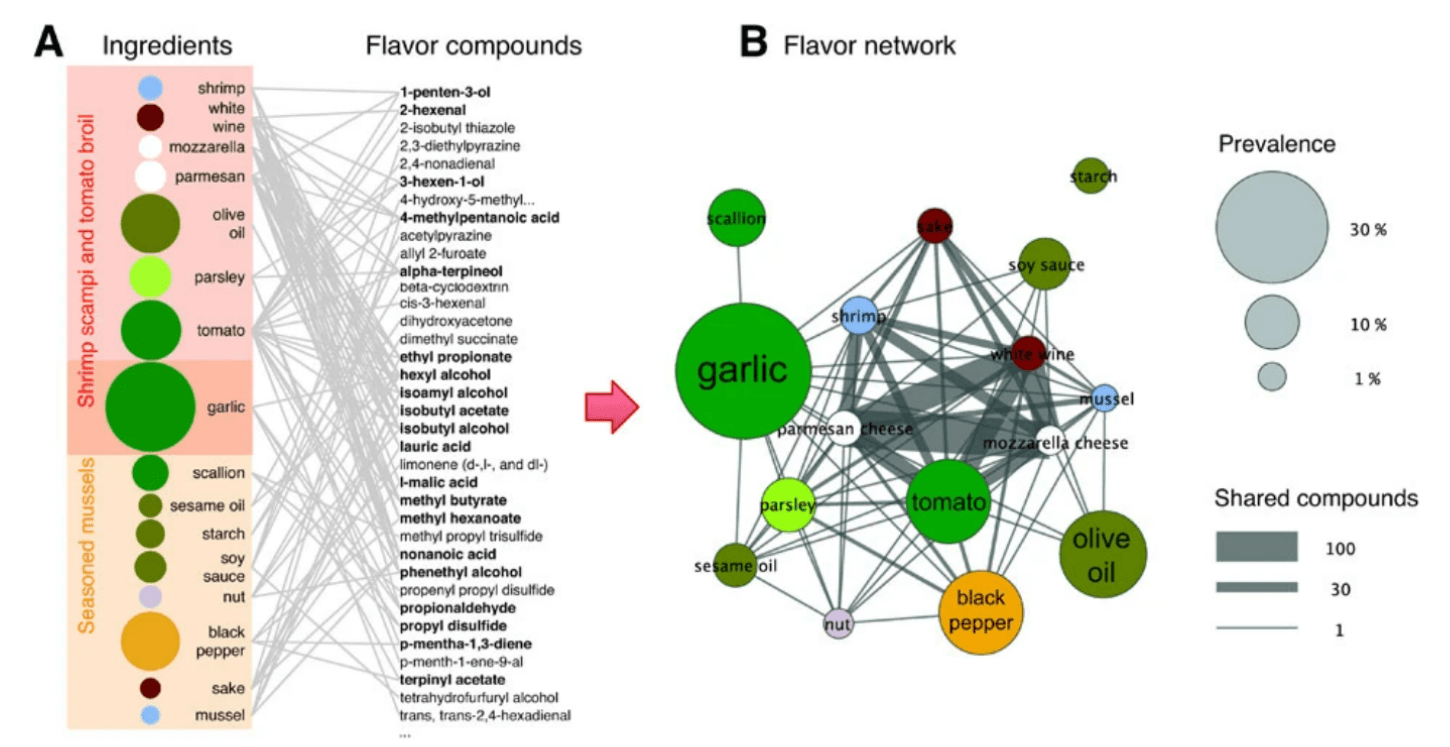

Picture a map in which each city represents a different food item. The roads connecting these cities represent the relationships between different foods, showing which foods are often consumed together, on the level of individual meals or whole diets. In other words, rather than regarding olive oil, tomatoes, wine, and pasta as four entirely separate and unrelated foods, network analysis allows us to take into account the fact that these four are often consumed as part of the same overall dietary patterns. Importantly, network analyses can also highlight the overlap in the nutrient profiles of diets varying in whole-food composition (e.g., some individuals may eat avocados and others olive oil, but both are then consuming oleic acid). Ultimately, these analyses make it possible to determine which combinations or families of nutrients or foods might underlie a given effect despite inevitable variability in the specific foods consumed. Similar network analysis techniques have often been applied for flavor profile analysis, as in the figure below that we’ve included to illustrate how network analysis works.

Figure: A food network analysis based on flavor profiles. (A) Ingredients in two recipes are shown linked to the flavor compounds present within them (right column), forming a network. Compounds shared by multiple ingredients are boldfaced. (B) The network from panel A is projected to form a flavor network in which each node is an ingredient. Nodes sharing at least one flavor compound are linked, with the thickness of each link representing the number of flavor compounds two ingredients share. Circle size represents the prevalence of the ingredients in recipes. Source: Ahn et al., Nature Scientific Reports, 2011.

In the context of diet studies, network analysis was applied in the Three-City Study (3C Study) – a population-based longitudinal study relating vascular diseases and dementia in subjects over the age of 65 – to show that those who ultimately developed dementia consumed a narrower variety of foods than those who did not. Additionally, the diets of those who developed dementia primarily centered around a combination of less healthy items, such as cured meats, though the specific foods may have differed across individuals. Though food network analysis still cannot eliminate the possible effects of healthy user bias or many other confounders, it can help reveal where patterns of food consumption – as opposed to a specific nutrient or food item – are relevant to a specific outcome.
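To make the "map of cities and roads" idea concrete, here is a minimal sketch of how a co-consumption network could be built from diet records. The food records are toy data I made up for illustration, and the function names (`co_consumption_network`, `degree`) are hypothetical; this is not the method used in the 3C Study, just the basic idea of counting how often pairs of foods appear together.

```python
from collections import Counter
from itertools import combinations

# Hypothetical toy data: one set of reported foods per participant.
records = [
    {"olive oil", "tomatoes", "wine", "pasta"},
    {"olive oil", "tomatoes", "fish"},
    {"cured meats", "bread", "wine"},
    {"cured meats", "bread", "butter"},
    {"olive oil", "fish", "wine"},
]

def co_consumption_network(records):
    """Return edge weights: the number of records containing both foods."""
    edges = Counter()
    for foods in records:
        # Sort so each unordered pair of foods has one canonical key.
        for a, b in combinations(sorted(foods), 2):
            edges[(a, b)] += 1
    return edges

def degree(edges):
    """Count how many distinct foods each food is linked to."""
    deg = Counter()
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg

edges = co_consumption_network(records)
deg = degree(edges)

# Show the most strongly linked food pairs ("thickest roads").
for (a, b), weight in edges.most_common(4):
    print(f"{a} -- {b}: eaten together in {weight} records")
```

In this sketch, the edge weight plays the role of road thickness, and a food's degree is a rough measure of how many other foods it tends to travel with. A real analysis would work from validated dietary questionnaires and would need to handle portion sizes and confounders, which this toy example ignores.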

#2: Biomarkers to Inform Trial Design

A major challenge to designing clinical trials is selecting the most effective timing, dosing, and duration of an intervention, as well as determining the relevant population of interest. Yassine et al. propose the use of biomarkers – quantitative biological metrics which reflect disease progression, responses to a given nutrient, or other relevant phenomena – to better inform these decisions. Biomarker tools can be used to aid in the analysis of findings and the identification of significant differences between subgroups (for instance, based on age stratification or health status). In turn, this information can guide future clinical trial design.

As an example, let’s consider the case of omega-3 fatty acids. Several clinical trials found no significant effect of omega-3 fatty acid supplementation on the development of dementia. A potential explanation, however, is that omega-3 supplementation is more beneficial in people with low baseline levels, which has been shown to be the case. These findings thus suggest that supplementation has no effect in people whose omega-3 levels are already above a certain threshold value, whereas people below this threshold may benefit. In other words, these data help to narrow in on the population of interest (i.e., omega-3 fatty acid-deficient participants), and indeed, studies specifically in this population have revealed a significant impact on dementia risk.
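A bit of arithmetic shows why a threshold effect like this can vanish in a mixed cohort. The sketch below assumes, purely for simplicity, that deficient and non-deficient participants share the same untreated risk, so the trial-wide relative risk is just a weighted average of the subgroup relative risks. The function name and every number here are hypothetical and not drawn from any omega-3 trial.

```python
def overall_relative_risk(deficient_fraction, rr_deficient, rr_replete=1.0):
    """Trial-wide relative risk when the effect differs by baseline status.

    Simplifying assumption: both subgroups have the same untreated risk,
    so the overall relative risk is a fraction-weighted average.
    """
    if not 0.0 <= deficient_fraction <= 1.0:
        raise ValueError("deficient_fraction must be between 0 and 1")
    return (deficient_fraction * rr_deficient
            + (1.0 - deficient_fraction) * rr_replete)

# Hypothetical numbers, chosen only for illustration:
# 20% of enrollees are deficient, and only they benefit (RR = 0.6).
mixed = overall_relative_risk(deficient_fraction=0.2, rr_deficient=0.6)

# Same intervention, but enrolling only deficient participants.
targeted = overall_relative_risk(deficient_fraction=1.0, rr_deficient=0.6)

print(f"mixed cohort relative risk:    {mixed:.2f}")
print(f"targeted cohort relative risk: {targeted:.2f}")
```

Under these assumed numbers, a 40% risk reduction in the deficient subgroup shrinks to an 8% reduction across the whole cohort, an effect that many trials would be too small to detect. This is the sense in which a baseline biomarker can point a trial toward the population where an effect is most likely to show up.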

#3: Sub-Analyses Using “-omics” Data

Considering the variety in individual responses to different nutrients, fully understanding their effects requires more “personalized” studies focused on targeted risk subgroups. As described above, biomarker data is one method for identifying meaningful differences between groups, but another strategy is to take advantage of existing “-omics” data, such as genomics and metabolomics, which represent comprehensive approaches for analysis of complete genetic or metabolic profiles, respectively. Using these methods, researchers could turn to results from genome-wide association studies (GWAS) to learn which genetic variants are known to be associated with dementia risk and conduct sub-analyses of clinical trial data by differentiating between participants with or without certain risk alleles. By applying more detailed sub-analyses, we could obtain a fuller understanding of how nutrients “behave” on a molecular level in different individuals, which would open doors to the future of precision medicine.

This approach can likewise be applied for sub-grouping participants based on epigenetics, transcriptomics, or microbiome profile, as in a study cited by Yassine et al. showing that a Mediterranean diet had a significant effect on cardiovascular risk only among participants with a particular species of commensal bacteria.

However, sub-analyses, by definition, come with a reduction in sample size compared to the primary study, which diminishes statistical power. Combining data (with careful scrutiny to ensure the comparability of the studies) from multiple studies through meta-analysis is one way to increase sample size, and therefore, statistical power, but sub-analyses should also be taken into account during the planning stages of an individual study. Anticipating sub-analyses during the initial design stages allows the sample size for the full cohort and respective subgroups to be calculated to ensure sufficient power for both primary analysis and sub-analyses. Indeed, deriving reliable conclusions from such sub-analyses requires that they be pre-specified (thus avoiding the “Texas sharpshooter fallacy”), so planning appropriate cohort sizes and sub-groups should be part of any study design phase. That being said, even unplanned and/or underpowered sub-analyses (so-called “fishing expeditions”) can still be informative as a means of generating hypotheses to be tested in future trials.
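To see how quickly power erodes in a subgroup, here is a rough sketch using the standard normal approximation for comparing two proportions. The event rates and cohort sizes are hypothetical, the function names are my own, and the power calculation ignores the negligible contribution of the opposite tail of the two-sided test.

```python
from math import ceil
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution

def power_two_proportions(p_control, p_treated, n_per_arm, alpha=0.05):
    """Approximate power of a two-sided z-test comparing two proportions."""
    z_crit = _Z.inv_cdf(1 - alpha / 2)
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    std_err = (variance / n_per_arm) ** 0.5
    return _Z.cdf(abs(p_control - p_treated) / std_err - z_crit)

def n_per_arm_needed(p_control, p_treated, power=0.8, alpha=0.05):
    """Approximate participants per arm needed to reach the target power."""
    z_crit = _Z.inv_cdf(1 - alpha / 2)
    z_power = _Z.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_treated * (1 - p_treated)
    return ceil((z_crit + z_power) ** 2 * variance
                / (p_control - p_treated) ** 2)

# Hypothetical trial: 10% dementia incidence on control, 7% on treatment.
full_cohort = power_two_proportions(0.10, 0.07, n_per_arm=2000)

# A subgroup (say, carriers of a risk allele) making up 25% of the cohort.
subgroup = power_two_proportions(0.10, 0.07, n_per_arm=500)

needed = n_per_arm_needed(0.10, 0.07, power=0.8)

print(f"power in full cohort: {full_cohort:.2f}")
print(f"power in subgroup:    {subgroup:.2f}")
print(f"per-arm size needed for 80% power: {needed}")
```

With these assumed inputs, a trial that is comfortably powered for its primary analysis falls well below the conventional 80% mark in a quarter-sized subgroup, even if the true effect there is just as large. Running `n_per_arm_needed` for each pre-specified subgroup during the design stage is one simple way to size the full cohort so that the sub-analyses are not doomed from the start.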

Bottom Line

Most existing evidence on how nutrition affects the risk of developing dementia comes from observational data. The fact that virtually all of the conclusions from these studies have failed to hold up in more controlled clinical trials signals an urgent need to reassess how we think about nutrition science research. The review by Yassine et al. proposes several solutions to help the field move forward, but as the authors point out, no single study design will ever be sufficient to capture the full picture of nutritional impacts on cognitive health.

We will need to use a combination of population-level and personalized studies and of observational and interventional studies. While large-scale studies, most of which tend to be observational, play an important role in highlighting patterns and guiding the formation of hypothesis-driven research and clinical trial development, smaller studies with subgroup analyses provide insights into the critical variables impacting effects across individuals. Interventional clinical trials offer reliable data on single nutrient effects, but by employing network analyses, biomarkers, and “-omics” data, we might generate more reliable epidemiological and clinical trial data to aid in drawing conclusions on the scale of whole diets.
