Want to catch up with other articles from this series?

- Studying Studies: Part I – relative risk vs. absolute risk

- Studying Studies: Part II – observational epidemiology

- Studying Studies: Part III – the motivation for observational studies

- Studying Studies: Part IV – randomization and confounding

- Studying Studies: Part V – power and significance

- Ask Me Anything #30: How to Read and Understand Scientific Studies

“Experimental ideas are by no means innate,” writes Claude Bernard, the renowned French physiologist, in An Introduction to the Study of Experimental Medicine. (This should be considered required reading for people getting into science.) He continues:

To have our first idea of things, we must see those things; to have an idea about a natural phenomenon, we must, first of all, observe it. The mind of man cannot conceive an effect without a cause, so that the sight of a phenomenon always awakens an idea of causation. All human knowledge is limited to working back from observed effects to their cause. Following an observation, an idea connected with the cause of the observed phenomenon presents itself to the mind. We then inject this anticipative idea into a train of reasoning, by virtue of which we make experiments to control it.

§

Proviso: This post is a part of a series on how to go about making sense of the data, conjectures, and the many tools that investigators use to translate information. If you’re a follower of this site (or its predecessor), you almost assuredly get the idea that correlation does not necessarily imply causation, and that relative risk is not the same thing as absolute risk.

However, there is so much more to the ways in which we might fool ourselves: whether it’s reading the latest headline, poring over a publication of a clinical trial, or just understanding what “statistical significance” truly means (and, more importantly, doesn’t mean), the literature and media abound in cognitive tripwires. We include ourselves—big time—when we write about fooling ourselves.

One of the selfish reasons why we’re devoting what might seem like an unconscionable amount of time to a blog (and this proviso) is to try to build the strongest knowledge base we can, so that we make as few mistakes as possible. Hippocrates was probably on to something in his plea, “first, do no harm,” but if taken literally, the best doctor is no doctor at all, or one that never intervenes. (We can’t help but smirk at this suggestion ourselves.) Sir William Osler couldn’t hold a candle to your lamppost when it comes to your choice for a primary care physician according to this logic. The reality is we have to make decisions based on assessing risk. (There’s a financial measurement that we equate this to: risk-adjusted return on capital, or RAROC, pronounced “ray-rock,” for short.) The risk of harm from an intervention is meaningless in a vacuum without understanding the risk of not intervening. But much more on that in the coming months.

You may get the sense that we’re not the biggest fans of observational epidemiology. That might be a little misguided (although we’re the ones at fault for giving this impression). There are places where epidemiology can be helpful. Again, if you’re reading this, you likely know that a success of epidemiology is linking tobacco smoking to lung cancer. But we may take our appreciation for epidemiology a step beyond cases where the data fit snugly into Bradford Hill’s “nine different viewpoints from all of which we should study association before we cry causation.” (Many, including Wikipedia, refer to this as the “Bradford Hill criteria.” It should be noted that Bradford Hill never referred to these nine viewpoints as criteria in his essay. He writes, “None of my nine viewpoints can bring indisputable evidence for or against the cause-and-effect hypothesis and none can be required as a sine qua non. What they can do, with greater or less strength, is to help us to make up our minds on the fundamental question – is there any other way of explaining the set of facts before us, is there any other answer equally, or more, likely than cause and effect?”)

We like to quote Charlie Munger—who likes to quote the mathematician Carl Jacobi: Invert. Always invert. The solution of many hard problems can often be clarified by re-expressing them in inverse form.

- Correlation implies causation?

- Lack of a correlation implies lack of causation?

In this case, perhaps the solution is inverted. Solution is obviously too strong a word, but we’re likely to analyze observational epidemiological studies (how much can we bag on epidemiologists when we’re armchair epidemiologists ourselves?) and discern something useful from what they don’t imply. What might come as a surprise is that we may often come to the opposite conclusion of the investigators. Suppose the world, or at least the World Health Organization (WHO), or more specifically the International Agency for Research on Cancer (IARC for short), is convinced that processed meat is a Group 1 carcinogen, in the same category as arsenic, “mustard gas,” plutonium, and asbestos, based on “sufficient evidence.” Given that the WHO looked at 800 epidemiological studies (more on this in the series) and found only a modest association (an estimated 18% increase in colorectal cancer risk for each additional 50 gram portion of processed meat eaten daily), one might actually come away with the opposite conclusion. Red meat or processed meat doesn’t have to prevent cancer in order for it to not cause cancer. Lack of a correlation doesn’t have to mean zero correlation. The same can be said for many of the myriad foods that are associated with cancer. Hell, just look what happened when John Ioannidis and Jonathan Schoenfeld picked 50 ingredients at random out of a cookbook and checked whether each was associated with cancer.

If we’re extremely careful, we can learn more about the world by looking at good observational studies. But the level of care we can apply is limited by our knowledge of the epidemiological world and understanding its strengths and limitations, amongst other components. We also don’t know if we’re looking at good studies in the first place if we don’t study all we can about what makes a good and bad study.

This is really the point of this exercise. We want to be “smarter,” or, more specifically, we want to have better discernment when it comes to science. We can hear Jacobi needling us: perhaps more accurately, we want to be less stupid. Like Neil deGrasse Tyson, we’re driven by two main philosophies: know more today about the world than we knew yesterday and lessen the suffering of others. It’s hard not to imagine that most people get into the field of epidemiology and public health for these very same reasons when we stop to think about it. When we don’t stop and think (which is too often the case), we lose sight of this.

§

The origins of epidemiology

Epidemiology is the branch of medicine that deals with the incidence, distribution, and possible control of diseases, and other factors relating to health. The word epidemiology comes from Greek: epidēmia, meaning prevalence of disease. (And -logy is probably best described as “the study of,” when referring to the sciences.) If we break the word down to its component parts, epi “upon,” demos “people,” logos “study,” epidemiology is the study of what is upon the people. Epidemiology is the study of epidemics. Investigators in this field look for associations of disease and health-related conditions and try to determine their cause.

Hippocrates reportedly wrote the earliest systematic attempt to relate the occurrence of disease to environmental factors. (These relationships are examined in three books attributed to Hippocrates: Epidemic I, Epidemic III, and On Airs, Waters, and Places. Take heed: “Of the roughly 70 works in the ‘Hippocratic Collection,’” notes Harvard University Press, “many are not by Hippocrates; even the famous oath may not be his.”) It is also said that he introduced the terms endemic and epidemic.

Hippocrates is often referred to as the first epidemiologist. He observed and recorded. He looked at associations between diseases and environmental factors like diet, living conditions, geography, and climate. For example, Hippocrates observed that different diseases appeared in different places. From the scaffolding of observations and recordings he built a theory of disease causation and taught that the human body was composed of four fluids called humors: blood, phlegm, yellow bile, and black bile.

The miasma theory of contagion, particularly in cholera, plagues, and malaria, is based on the humoral theory of Hippocrates and Galen. Malaria translates to bad air. Observing that malaria, for instance, appeared in swampy places led people like Hippocrates to believe that the disease might be caused by air pollution (i.e., miasma). This was prior to the introduction of the germ theory of disease. The origins of many epidemics were attributed to a miasma. We now know that malaria is a mosquito-borne infectious disease. Thus, there were other variables playing the dominant role in driving malaria, unbeknownst to Hippocrates and others. (John Snow, born in 1813 and considered by many to be one of the fathers of modern epidemiology, was one of the first people to link germ theory to the 1854 Broad Street outbreak of cholera.)
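To make the role of that hidden variable concrete, here is a minimal sketch in Python. Every count below is invented for illustration (these are not historical data), and the names `GROUPS`, `risk`, and `risk_ratio` are our own. It assumes a world in which only mosquito exposure changes malaria risk, while swampy “bad air” simply tends to come along with mosquitoes.

```python
# Hypothetical counts, invented purely for illustration (not real data).
# Each record: (breathes_swamp_air, exposed_to_mosquitoes, people, malaria_cases)
GROUPS = [
    (True,  True,  800, 240),
    (True,  False, 200,   2),
    (False, True,  200,  60),
    (False, False, 800,   8),
]


def risk(rows):
    """Proportion of people in these rows who have malaria."""
    people = sum(row[2] for row in rows)
    cases = sum(row[3] for row in rows)
    return cases / people


def risk_ratio(rows, exposure_index):
    """Risk among the exposed divided by risk among the unexposed."""
    exposed = [row for row in rows if row[exposure_index]]
    unexposed = [row for row in rows if not row[exposure_index]]
    return risk(exposed) / risk(unexposed)


# Crude comparison: swamp air versus no swamp air, ignoring mosquitoes.
crude_rr = risk_ratio(GROUPS, 0)

# Stratified comparison: the same question, asked separately within each
# level of mosquito exposure (the variable the miasma theory never saw).
rr_with_mosquitoes = risk_ratio([row for row in GROUPS if row[1]], 0)
rr_without_mosquitoes = risk_ratio([row for row in GROUPS if not row[1]], 0)

print(f"crude risk ratio for swamp air:   {crude_rr:.2f}")
print(f"risk ratio among the bitten:      {rr_with_mosquitoes:.2f}")
print(f"risk ratio among the unbitten:    {rr_without_mosquitoes:.2f}")
```

In this made-up population, people who breathe swamp air have a crude risk ratio of about 3.6, yet within each mosquito stratum the risk ratio is 1.0. The air only looks guilty because it travels with the mosquitoes.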

Figure 1. Are you a mosquito breeder? Malaria was a constant menace for GIs stationed in the Pacific and Mediterranean theaters during World War II. Image credit: The National Archives Catalog.

At its core, observational epidemiology today is not entirely different from the practices of Hippocrates over 2,000 years ago. Epidemiologists make observations and associate risk factors with diseases. Statistics has refined the practice, yes, but we must not lose sight of this fact.

Observational epidemiology aided Percivall Pott’s investigations in the late 18th century linking a high incidence of scrotal cancer (later identified as squamous cell carcinoma of the skin of the scrotum) in chimney sweeps to soot. (This is one reason I stopped sweeping chimneys [Figure 2], by the way, and I’d recommend the same for any readers still bent on sweeping their own…) This finding sparked the search for more environmental carcinogens. Probably the two best-known successes of epidemiology are the finding that cigarette smoking is strongly associated with lung cancer (small-cell lung cancer, or SCLC, in particular) and John Snow linking contaminated water to an outbreak of cholera in 1854 (favoring the germ theory over the miasma theory), which the discerning reader will note we paid tribute to with the map at the top of this post.

Figure 2. Peter Attia taking a quick break from the action during an apprenticeship under Ron Tugnutt in the streets of Scarborough, Ontario. Image credit: Anonymous (Unknown) [Public domain], via Wikimedia Commons

Perhaps the raison d’être of science is determining cause and effect. Observational studies, by their nature, usually cannot establish causation or even support an inference of causality. Strong correlations (i.e., associations) found in observational studies can be compelling enough to take seriously, but compelling is often in the eye of the beholder. Most observational studies are reported in terms of relative risk (for more on relative risk, refer to Part I of this series). Many journals publish articles every year reporting studies finding some environmental factor (e.g., a food, a drug, a behavior) that increases or decreases the risk of cancer or some other disease. Most of these findings are from observational epidemiology. Taken alone, even after taking into account the absolute risks as well as relative risks (and the associated biases and confounding that we’ll discuss in more detail in an upcoming post), these findings don’t actually tell us if we’re at increased or decreased risk (only that the factor is associated with risk), but in rare instances they may provide a clue to follow up on. If so, we have an idea for an experiment.
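A short worked example may help show why relative risk alone says little about how much risk we actually face. The sketch below is in Python; the baseline risks are hypothetical round numbers chosen for illustration, and the function names are our own. The 18% figure is the increase quoted earlier in this post for processed meat.

```python
def risk_if_exposed(baseline_risk, relative_risk):
    """Absolute risk in the exposed group, given the unexposed (baseline) risk."""
    return baseline_risk * relative_risk


def absolute_risk_increase(baseline_risk, relative_risk):
    """Extra absolute risk that would follow IF the association were causal."""
    return risk_if_exposed(baseline_risk, relative_risk) - baseline_risk


RELATIVE_RISK = 1.18  # an 18% relative increase

# Hypothetical baseline risks (assumptions, not measured values).
for baseline in (0.001, 0.01, 0.05):
    exposed = risk_if_exposed(baseline, RELATIVE_RISK)
    extra = absolute_risk_increase(baseline, RELATIVE_RISK)
    print(
        f"baseline {baseline:.1%} -> exposed {exposed:.3%} "
        f"(absolute increase {extra:.3%})"
    )
```

The same 18% relative increase corresponds to an extra 0.018 percentage points at a 0.1% baseline and an extra 0.9 percentage points at a 5% baseline. And none of this arithmetic tells us whether the association is causal in the first place.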

Hypothesis-generating or hypothesis-testing?

Observational epidemiology is often considered to be hypothesis-generating. However, this is often inaccurate, since most observational epidemiology is hypothesis-testing. As Richard Feinman (“the other”) astutely observed:

If you notice that kids who eat a lot of candy seem to be fat, or even if you notice that candy makes you yourself fat, that is an observation. From this observation, you might come up with the hypothesis that sugar causes obesity. A test of your hypothesis would be to see if there is an association between sugar consumption and incidence of obesity. There are various ways—the simplest epidemiologic approach is simply to compare the history of the eating behavior of individuals (insofar as you can get it) with how fat they are. When you do this comparison you are testing your hypothesis. There are an infinite number of things that you could have measured as an independent variable, meat, TV hours, distance from the French bakery but you have a hypothesis that it was candy. Mike Eades described falling asleep as a child by trying to think of everything in the world. You just can’t test them all. As Einstein put it “your theory determines the measurement you make.”
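Feinman’s candy comparison can be sketched as a small calculation. The Python below runs a Pearson chi-square test of independence on a 2×2 table; the counts are hypothetical, the function name is our own, and the p-value uses the closed form for one degree of freedom with no continuity correction. It tests for an association only, and says nothing about whether candy causes obesity.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic and p-value for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    statistic = 0.0
    for i in range(2):
        for j in range(2):
            # Count we would expect in this cell if candy and obesity were unrelated.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows are high/low candy intake, columns are obese/not obese.
statistic, p_value = chi_square_2x2(60, 140, 40, 160)
print(f"chi-square = {statistic:.2f}, p = {p_value:.3f}")
```

With these invented counts the test comes out “significant” at the conventional 0.05 level, which is exactly the sense in which the comparison tests the hypothesis. It would come out the same way if something else entirely were driving both candy eating and weight.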

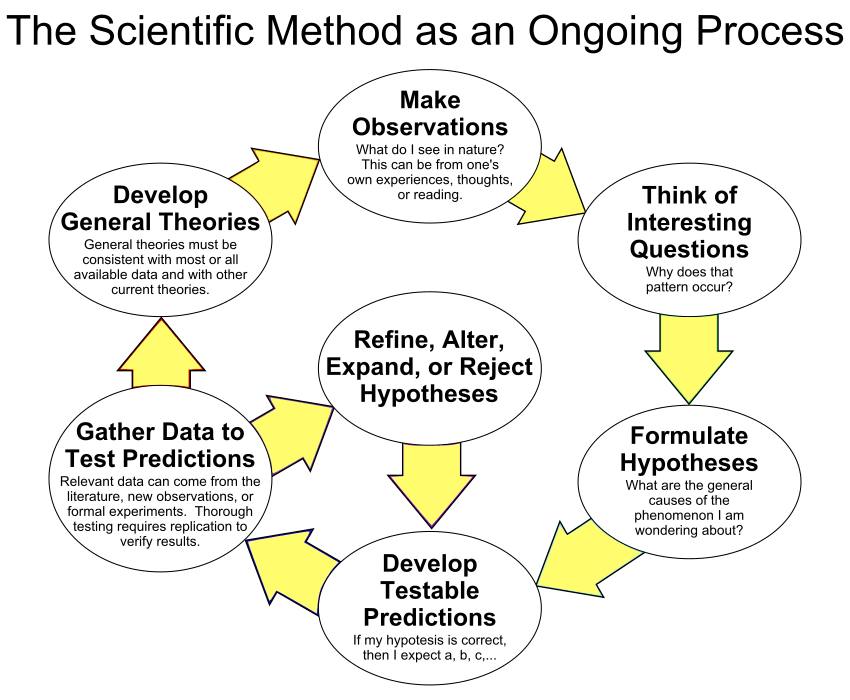

It’s just that most observational epidemiology cannot establish cause and effect (and therefore cannot soundly accept or reject a hypothesis). Observations are our first idea of things, as Bernard would say. It’s the first step in the scientific method:

1. Make an observation

2. Come up with a (falsifiable) hypothesis from that observation

3. Conduct an experiment to test the hypothesis

4. Accept or reject the hypothesis (if accepted, reproduce; if rejected, repeat, back to the drawing board, or discard)

Step #3, the experiment, is the most important for determining causality, and for finding out whether our eyes are playing tricks on us (which they so often do in science). It’s also the step that’s admittedly skipped or glossed over by the fields of nutrition and public health. The kinds of experiments necessary to establish reliable knowledge are often enormously expensive, take many years to complete and interpret, and are excruciatingly difficult to conduct with the rigor required. These are common refrains from investigators, politicians, and anyone who wants to know the answers to questions pertaining to health and disease. What do I need to do, or avoid, to live a long, healthy life and prevent myself and my loved ones from getting cancer, cardiovascular disease, and dementia? This is what many people want to know.

Figure 3. The scientific method as a cyclic or iterative process. By ArchonMagnus (Own work) CC BY-SA 4.0, via Wikimedia Commons.

Ideally, to answer those kinds of questions, public health decisions should be based on randomized experiments. Yes, conducting the kinds of experiments that will help establish cause and effect is expensive, time-consuming, and challenging (if not outright impossible at times). But these challenges don’t absolve us from doing them if we want to know if what we know is really so, as Robert Merton would say. Typically, doing more and more observational studies doesn’t get us any closer to the truth. (The French naturalist Georges Cuvier is believed to have said, “The observer listens to nature; the experimenter questions and forces her to unveil herself.”) It may be arguable that observational epidemiology is actually more expensive than doing experiments if it’s judged on the reliable knowledge it returns on the investment required to conduct observational studies. (“Men sometimes seem to confuse experiment with observation,” writes Bernard. And he goes on to quote the 18th century Swiss physician Johann Georg Zimmermann, who said, “An experiment differs from an observation in this, that knowledge gained through observation seems to appear of itself, while that which an experiment brings us is the fruit of an effort that we make, with the object of knowing whether something exists or does not exist.”)

The randomized controlled trial (RCT) is often considered the gold standard for determining the risk or benefit of a particular intervention. So why don’t we see more RCTs in the field of epidemiology?

That’s where we’ll pick up next time. Why not just run an RCT? What is the motivation for an observational study, what are the nuts and bolts, and what can (and can’t) these studies tell us? We’ll cover this and more in Part III of Studying Studies. If you missed Part I on relative risk, give it a read.