If I told you that I read a randomized double-blinded placebo-controlled trial conducted over 5 years and carried out in over 18,000 participants, is there any scenario under which you would not believe it to be an excellent trial?

Randomized-controlled trials (RCTs) are considered the gold standard for establishing reliable knowledge and for establishing cause and effect. By randomly allocating alike participants into a treatment or control group, much of the bias encountered in observational studies is reduced substantially. But if there’s one thing you learn by reading beyond the abstract, there’s more than meets the eye.

Case in point: last month JAMA published the results of such a trial design entitled, “Effect of Long-term Vitamin D Supplementation vs Placebo on Risk of Depression or Clinically Relevant Depressive Symptoms and on Change in Mood Scores.”

The opening line from the paper establishes the study’s clinical importance: “Low levels of 25-hydroxyvitamin D have been associated with higher risk for depression later in life, but there have been few long-term, high-dose large-scale trials [of vitamin D supplementation].” 25-hydroxyvitamin D, or 25(OH)D for short, is a prehormone that can be easily measured by a blood test and is considered the best indicator of vitamin D status. For clarity, I’ll refer to 25(OH)D levels as vitamin D levels from here on out. Also, participants in the trial received a vitamin D supplement in the form of cholecalciferol, also known as vitamin D3, but I’ll refer to it as “vitamin D” to avoid confusion.

Vitamin D deficiency is generally defined by vitamin D levels below 20 ng/mL. To determine whether raising vitamin D levels reduces depression risk, the participants were randomized to either a daily placebo or a daily vitamin D supplement containing 2,000 IU. Here’s the upshot of the paper from the perspective of the authors: the study’s findings did not support the use of vitamin D in adults to prevent depression. The investigators did not find statistically significant differences in their primary outcomes: the total risk of depression or clinically relevant depressive symptoms, and the long-term trajectory in mood scores.

Given its strengths, you may be surprised that I think this trial was far from amazing. So, what are my problems with this trial and why don’t I think it generated reliable evidence in support of its conclusions? What follows might be best described as a Journal Club Light. I’m planning on recording and sharing a more in-depth Journal Club discussing this paper (and/or other papers like it) with my team.

There are a few questions I asked myself before accepting the findings in this trial and I’ll expand on some of them below. These questions, while by no means exhaustive, should demonstrate why you always need to look below the surface (e.g., the abstract or press release), even when what you’re looking at is considered the gold standard of evidence.

- How rigorously was the variable of interest isolated?

- Did they reach their target endpoint (i.e., what were the follow-up vitamin D levels?)?

- What were the baseline characteristics?

- What was the primary outcome and how was it determined?

- What was the adherence and how was it monitored?

- What was the dose of the intervention?

How rigorously was the variable of interest isolated?

A total of 9,181 participants were randomized to receive vitamin D and 9,172 were randomized to receive a placebo. Given the trial was randomized, double-blinded, and placebo-controlled, it seems safe to assume they isolated vitamin D supplementation from confounding variables quite well.

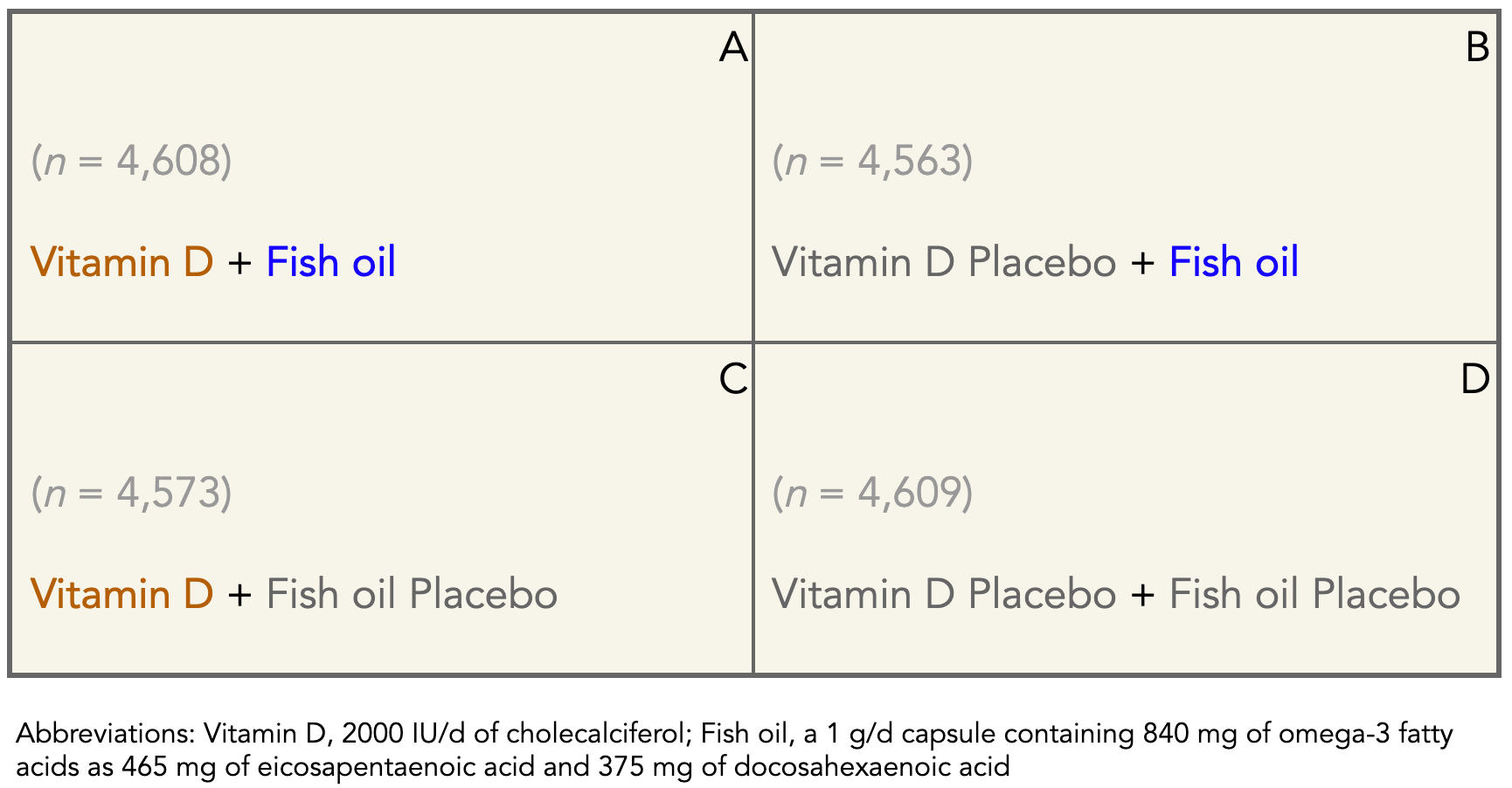

However, the intervention actually employed what is called a 2 x 2 factorial design. A factorial design is one involving two or more factors in a single experiment. This study didn’t just look at vitamin D supplementation; it also included fish oil supplementation. This means that of the 9,181 participants randomized to vitamin D, about half (4,608) were randomized to fish oil and the other half (4,573) to a second placebo, as the Table below shows.

Table. 2×2 factorial design of vitamin D and fish oil supplementation.

Yet, when examining the primary outcome (risk of depression or clinically relevant depressive symptoms), the investigators lumped the vitamin D + fish oil and vitamin D + fish oil placebo groups (Boxes A & C in the Table) together, referred to them as the “vitamin D group” throughout the paper, including in the title, and omitted the fact that half of the vitamin D intervention group was also randomized to fish oil (Box A in the Table). Taking a vitamin D supplement alongside a fish oil supplement might have a different effect than taking a vitamin D supplement alone. Given the title of the paper and the clinical question, why wouldn’t the investigators exclude the groups taking fish oil to eliminate this variable?
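To make the pooling concrete, here is a minimal sketch of the arm arithmetic, using only the counts quoted above. The variable and function names are illustrative, not taken from the paper, and the split of the placebo arm between fish oil and fish oil placebo is not given here, so it is left as a single total.

```python
# Arm sizes as quoted in this post (names are illustrative, not the paper's).
VITAMIN_D_PLUS_FISH_OIL = 4608          # Box A
VITAMIN_D_PLUS_FISH_OIL_PLACEBO = 4573  # Box C
VITAMIN_D_PLACEBO_TOTAL = 9172          # Boxes B and D combined; split not given here


def pooled_vitamin_d_arm() -> int:
    """Size of the 'vitamin D group' once Boxes A and C are lumped together."""
    return VITAMIN_D_PLUS_FISH_OIL + VITAMIN_D_PLUS_FISH_OIL_PLACEBO


def share_also_on_fish_oil() -> float:
    """Fraction of the pooled 'vitamin D group' that was also randomized to fish oil."""
    return VITAMIN_D_PLUS_FISH_OIL / pooled_vitamin_d_arm()


def total_randomized() -> int:
    """Everyone randomized, across vitamin D and placebo arms."""
    return pooled_vitamin_d_arm() + VITAMIN_D_PLACEBO_TOTAL


if __name__ == "__main__":
    print(pooled_vitamin_d_arm())             # 9181
    print(f"{share_also_on_fish_oil():.1%}")  # 50.2%
    print(total_randomized())                 # 18353
```

In other words, roughly every second person counted in the “vitamin D group” was taking fish oil as well.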

Did they reach their target endpoint (i.e., what were the follow-up vitamin D levels?)?

We don’t know. They weren’t measured at follow-up.

Remember the question the investigators are trying to answer in this trial. If low levels of vitamin D are associated with increased risk of depression later in life, will increasing vitamin D levels by supplementing with vitamin D decrease depression risk? The problem is we don’t know what vitamin D levels looked like in the vitamin D group compared to placebo after 5.3 years, the median duration of treatment.

Let that sink in for a moment.

In a double-blind placebo-controlled randomized clinical trial addressing whether increasing levels of vitamin D can prevent depression, the investigators did not confirm whether, and by how much, vitamin D supplementation increased vitamin D levels in their participants.

Instead, the investigators measured vitamin D levels in a subgroup of fewer than 10% of all participants, at baseline and again after 12 months (in a 64-month study). They found that mean vitamin D levels increased from 31 ng/mL at baseline to 42 ng/mL at 1 year in the vitamin D group and changed minimally (less than 1 ng/mL) in the placebo group.

I don’t think we should assume what vitamin D levels were in the participants after 5 years of follow-up. The benefit of well-designed RCTs is the elimination of assumptions like these.
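A quick back-of-the-envelope sketch of what that subgroup measurement does and does not cover, using only the figures quoted above (the names are illustrative):

```python
# Figures quoted in this post for the subgroup with repeat measurements.
BASELINE_NG_ML = 31        # mean vitamin D level at baseline
ONE_YEAR_NG_ML = 42        # mean vitamin D level at 12 months
MEASURED_AT_MONTHS = 12    # when the repeat measurement was taken
STUDY_LENGTH_MONTHS = 64   # approximate length of the study

# Absolute and relative rise in mean vitamin D level over the first year.
rise_ng_ml = ONE_YEAR_NG_ML - BASELINE_NG_ML
relative_rise = rise_ng_ml / BASELINE_NG_ML

# Share of the study period for which a vitamin D measurement exists at all.
fraction_of_follow_up = MEASURED_AT_MONTHS / STUDY_LENGTH_MONTHS

if __name__ == "__main__":
    print(rise_ng_ml)                      # 11
    print(f"{relative_rise:.0%}")          # 35%
    print(f"{fraction_of_follow_up:.0%}")  # 19%
```

So the only confirmed change in vitamin D levels comes from the first fifth or so of the study, in a small subgroup. Anything about the remaining four-plus years is an assumption.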

What were the baseline characteristics?

Did all of the participants have low levels of vitamin D at baseline? Only about 11% of participants were considered vitamin D-deficient to begin with. The mean baseline value was 31 ng/mL. (These baseline values also appear to be drawn from a subgroup of individuals. The mean was calculated from 11,417 participants, which is about 60% of the total number of participants in the study.)

Since low levels are associated with increased depression risk, this is the population of greatest interest. One possible reason there wasn’t a reported effect in this trial is that many participants were already at a vitamin D threshold for health benefits at baseline and not likely to see an observable effect of supplementing with vitamin D.

If we want to test whether low levels of vitamin D contribute to depression risk, and that increasing these levels can prevent depression risk, we want to make sure that 1) participants have low levels at baseline and 2) the intervention group increases their levels while the placebo group remains unchanged at the time of follow-up. Otherwise, the study doesn’t address whether ameliorating vitamin D deficiency reduces the risk of depression later in life.
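To see why enrolling mostly non-deficient participants matters, here is a minimal sketch of how an effect confined to the deficient minority gets diluted in the overall result. Everything in it is hypothetical except the 11% figure quoted above: the function name, the assumed relative risks, and the simplifying assumptions that supplementation does nothing for non-deficient participants and that both subgroups have the same baseline risk of depression.

```python
def diluted_relative_risk(fraction_deficient: float, rr_in_deficient: float) -> float:
    """Overall relative risk when only the deficient subgroup benefits.

    Hypothetical model: non-deficient participants get no benefit (relative
    risk 1.0) and both subgroups share the same baseline risk, so the overall
    relative risk is a weighted average of the two subgroup relative risks.
    """
    if not 0.0 <= fraction_deficient <= 1.0:
        raise ValueError("fraction_deficient must be between 0 and 1")
    return fraction_deficient * rr_in_deficient + (1.0 - fraction_deficient) * 1.0


if __name__ == "__main__":
    # 11% deficient at baseline (from the post); the effect sizes are made up.
    for rr in (0.9, 0.7, 0.5):
        overall = diluted_relative_risk(0.11, rr)
        print(f"RR in deficient = {rr:.1f} -> overall RR = {overall:.3f}")
```

Under these assumptions, even a hypothetical 30% risk reduction in deficient participants (relative risk 0.7) would show up as an overall relative risk of about 0.97, which is easy to mistake for no effect.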

What was the primary outcome and how was it determined?

The primary outcomes were the total risk of depression and longitudinal mood scores. Knowing how the primary outcomes were determined is often as important as the primary outcomes themselves. In the trial, they were determined by a self-report of depression diagnosed by a physician, treatment for depression, or presence of depressive symptoms via an annual questionnaire called a PHQ-8.

The Patient Health Questionnaire depression scale, or PHQ-8, is a questionnaire that participants in the study self-reported annually. Admittedly, “Self-reported mood and depression variables were of uncertain validity,” according to the investigators. However, they also argued that even if the self-reported cases of depression were actually over- or underestimated, the results would be consistently inaccurate between the vitamin D group and placebo. Whether you agree or disagree with this argument, keep in mind that the primary outcome of a double-blinded placebo-controlled RCT was determined by a self-reported questionnaire on mood and depression variables. The proportion of self-reported depression diagnosis by a physician, treatment for depression, or presence of depressive symptoms on the PHQ-8 rating scale was not reported. I suspect most depression cases were based on the PHQ-8 rating scale (which is almost assuredly the most prone to error) but it would be useful for the investigators to report this information.

What was the adherence and how was it monitored?

Participants were followed up annually via mailed questionnaires to update information on study pill adherence rates, defined as taking at least two-thirds of pills as assigned.

Adherence with study pills was 90% or greater in both treatment groups at all assessments: about 95% adherence after year 1 and 90% at year 5.

Similar to the primary outcome data, this is self-reported information on a questionnaire. Maybe this data is accurate, but don’t assume that just because you’re looking at an RCT, participants are necessarily more carefully monitored than in an observational epidemiological study.

What was the dose of the intervention?

The vitamin D dose was 2,000 IU/d and the fish oil dose delivered 840 mg of omega-3 fatty acids (465 mg of EPA & 375 mg of DHA). In my opinion, the vitamin D and fish oil doses are too low to have an effect. In other words, this trial was essentially comparing placebo to placebo.

Admittedly, when I first set eyes on this paper, I wanted to know the dose of vitamin D used since I think most if not all trials I’ve seen to date are not using a high enough dose to have a physiological effect. But the proof is in the pudding and I figured I could test my bias by seeing what happened to the vitamin D levels of the participants at the end of the study, especially in the participants who were deficient at the start. Unfortunately, this information was not provided.

If you just look on the surface, you might see a large-scale, long-term, randomized double-blinded, placebo-controlled trial and assume whatever it finds provides reliable evidence. But I hope I’ve convinced you that the devil is often in the details, regardless of the type of study conducted.

– Peter

They underdosed them because they don’t want it to work. This test was designed to fail. Like many propaganda-driven tests. Like Peter said, you have to read past the title/headline and read the whole report/study! Vitamin D is really amazing and there are many other good studies that show Vitamin D is very beneficial. Semper Fi

What dose of vitamin D do you think would be sufficient to see a physiological effect?

Here’s a rhetorical question…

What did this study ‘factually’ (i.e., by the numbers) show?

Aside

The graph in Aloia JF, Li-Ng M: Epidemic influenza and vitamin D. Epidemiol Infect 2007; 135: 1095–1096. is so compelling, though it really requires a much greater n.

Goes to show that people can have all the info-wars they want pointing to studies on the internet, but unless you can take a study apart like Peter does here, most of us can’t determine if it’s legit or not.

Over time, we look to scientists and learn who to listen to and trust by their standards of behavior and consistency of action. One example, among others, is when they are regularly able to admit they were wrong.

As you point out, the lack of baseline levels selection hurts statistical power since your non-deficient subjects will show no effect, however if there was any substantial effect, with 11% of 18,000 subjects the significance should still get through the roof. I previously believed that a large fraction of the American population was D3 deficient; what is the consensus estimate? Besides this, the analysis factors *must* include baseline levels and also gender. Does the article full text (the link you provided to the abstract doesn’t allow full text consultation unless you are subscribed to JAMA) at least give the actual results broken down in their 2/2 matrix? Did the authors at least look at the interaction? If the interaction is significant nothing can be said about the main effects–that is statistics 101…

I have found an even worse RCT that has so many biases it’s almost criminal. They basically made an online form that asked questions to social media users for symptoms of COVID. Then they either sent by mail a placebo or hydroxychloroquine to the internet user with claimed symptoms, with no doctor involved in the diagnosis. Their online form had 9000+ applicants and they ended up with approx 800 using their custom “filters”. The study was touted as RCT, a gold standard, by the media and it was used to make recommendations to the CDC! It was basically a glorified online form masquerading as research.

The insane study:

https://www.nejm.org/doi/full/10.1056/NEJMoa2016638

They actually did look at the possible confounding by the fish oil takers: “There were no significant differences in the effects of vitamin D3 on risk of depression or clinically relevant depressive symptoms among subgroups (Figure 4), *including* the fish oil group vs the fish oil placebo group; tests of interaction were not significant.” Interestingly, however, when you look at Fig. 4, there’s certainly a *suggestion* of an effect; it’d’ve been great to have gone a couple of steps further and compared baseline-deficient (and also insufficient (30 ng/mL) post-trial-replete, vitamin-D-only vs. all-placebo groups.

So many of these studies using vitamins and minerals show their usage as failing for some reason or another. Vitamins and minerals are not included in the medical mafia’s regimen for treatment of disease and prevention of disease. That is because their usage would disrupt the use of much higher priced and largely ineffective drugs. As an experiment, let’s feed a group of people all the food and water they want but without any vitamins and minerals contained in it. Let’s see what happens over time. Then they can go to the doctor and get all the drugs they want to fix themselves. The RDAs set by the FDA for vits and mins are mostly ridiculous and virtually useless if you are over the age of 30-40. As consumers of medical services, we are being hoodwinked by Big Pharma, which controls medical schools, most of the medical system (where drugs are at the forefront of everything), most of the testing agencies, most doctors, the medical paper publishing, and outlandish lobbying of Congress with millions of dollars spent to control legislation. And of course vaccines which are seldom, if ever, more than 50% effective and may cause serious damage to the body over time. I will always trust vitamins and minerals before I trust even my doctor who, bless his soul, has led me down the wrong path a few times. Drugs, drugs and more drugs…they can’t give you enough. You have to wonder how people survived before the invention of cure-all drugs.

Dr Robert Heaney is one of the world’s leading experts on Vit D. There is a great YouTube presentation “Design components and interventions/ studies of Vit D”.

Rules for Nutrient Studies.

For a Nutrient study to be informative.

1. Basal nutrient status must be determined and used as an inclusion criterion.

2. The change in intake must be large enough to change nutrient status meaningfully.

3. Change in nutrient status, not nutrient intake, must be the independent variable in the hypothesis.

4. Change in status must be quantified.

5. Co-nutrient status must be optimised.

He states that many studies have failed one or more of these key rules.

Looks like this study is no different.

That study was a waste of time, money and resources. No knowledge gained, congrats to the “scientists” at work there.

Thanks for that, Peter. It’s pretty pathetic how so-called scientists carry out studies like this, surely getting huge grants for them, and manage to do them as unscientifically as they can be done. Doesn’t anyone up there know how to design and carry out a trial to test the effects of a single isolated variable? Geez!